Solutions Partners

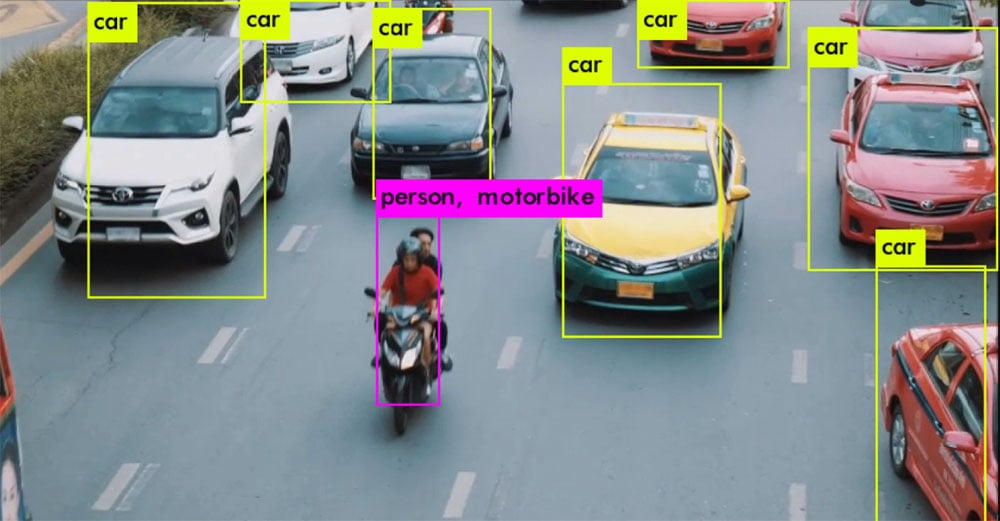

Our Partner Program ecosystem includes a range of AI/ML inference options, from next-gen ASIC-based cards to IP for development on FPGAs.

AI/ML models and inference have changed a lot in just a few years. Hardware that excels at running training algorithms can fall behind in latency and utilization for real-time, batch-size-1 inference. Deep learning models have also grown more complex, so real-time applications need new approaches to keep up.

The good news is that, as machine learning has matured, acceleration technology has become smarter and more efficient. These gains come both at the silicon level, such as dedicated ASIC devices, and from the design approach, such as using 8-bit integers for connection weights.

BittWare, a brand trusted for over thirty years to bring the best acceleration technology to market, has assembled an ecosystem of FPGA- and ASIC-based AI solutions optimized for inference.

Whether you are scaling up from a CPU- or GPU-based system to the latest datacenter-grade tensor processor or squeezing the most performance per watt out of an edge-focused solution, we have what you need to reduce risk and get to market faster.

Where do BittWare AI/ML customers deploy?

For some partners, we also supply development platforms used to evaluate their technology.


We look at running neural network inference on FPGA devices, illustrating the strengths and weaknesses of this approach.

Programming Stratix 10 using OpenCL for machine learning. Topics Covered: OpenCL, machine learning, Stratix 10.

Using variable precision in FPGAs to build better machine learning inference networks. Topics Covered: machine learning, application tailoring, Arria 10.