520N-MX PCIe Card with Intel Stratix 10 MX FPGA

PCIe FPGA Card 520N-MX: Stratix 10 FPGA board with 16GB HBM2, a powerful solution for accelerating memory-bound applications.

Run the same models & workloads you run today with lower latency and greater power efficiency

Want to accelerate your edge AI workloads? There are many options to get started, whether you’re in early-stage development or ready to go to volume production on a complex module.

Get the SAKURA-I chip on a low-profile PCIe card that's perfect for benchtop development. Includes the MERA Compiler Framework and tools, with the DNA neural processing engine embedded in SAKURA-I.

Includes the MERA Compiler Framework and tools and the DNA neural processing engine IP, bundled with BittWare's PCIe accelerator cards featuring Intel Agilex 7 FPGAs.

Because SAKURA-I is a power-efficient ASIC, it is a perfect fit for customized cards or microelectronics modules.

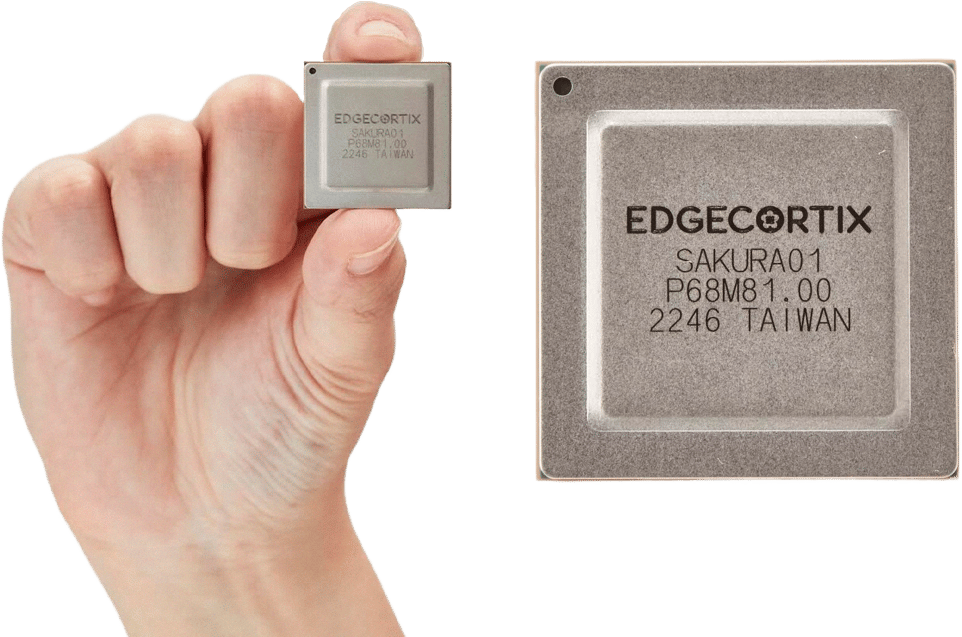

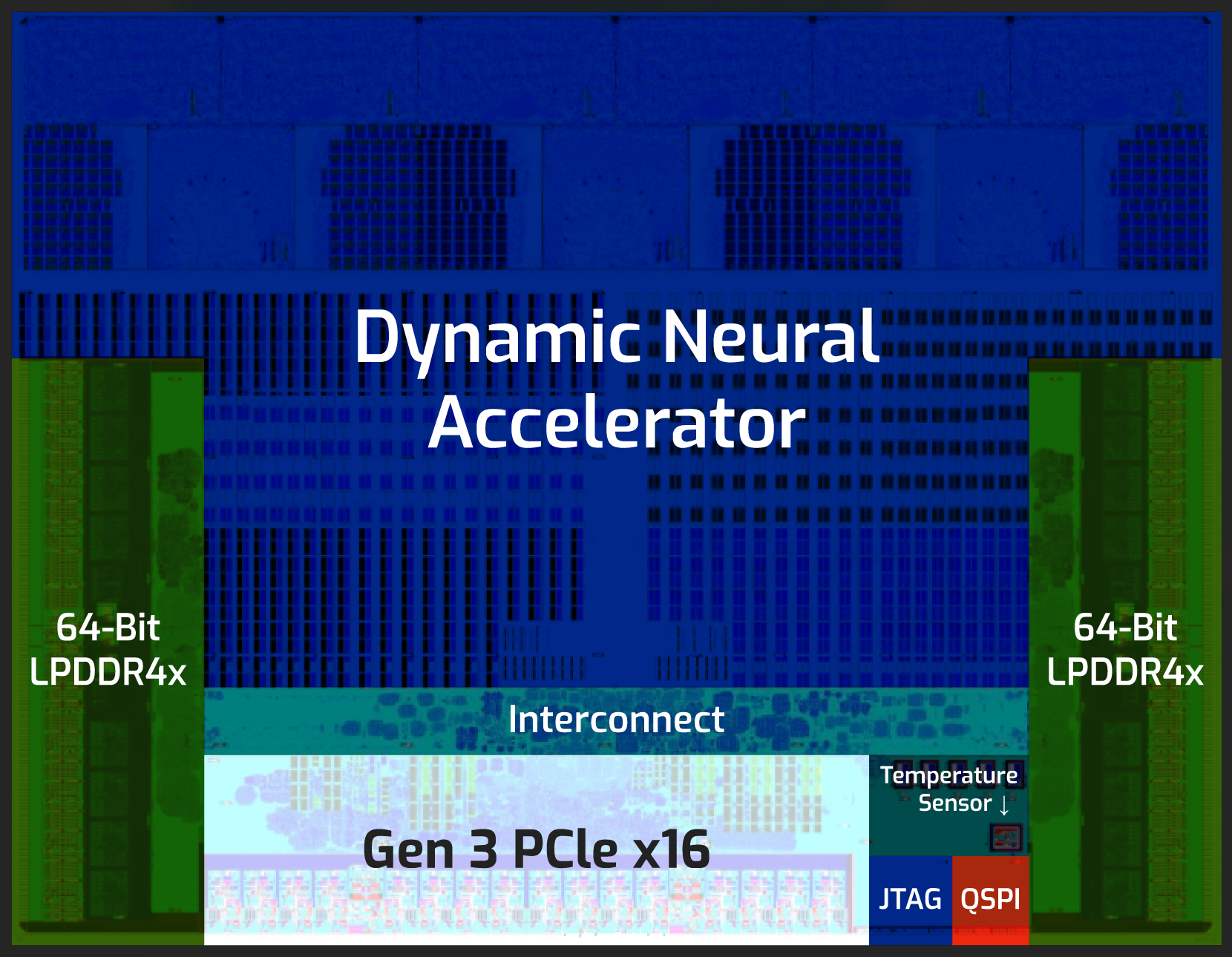

EdgeCortix SAKURA-I is a co-processor (accelerator) for edge artificial intelligence (AI) inference. It is built on TSMC's 12nm FinFET process and is designed for high compute efficiency and low latency. It is powered by a single-core, 40 trillion operations per second (TOPS) Dynamic Neural Accelerator® (DNA) intellectual property (IP) core. DNA is EdgeCortix's proprietary neural processing engine, and its built-in, runtime-reconfigurable datapath connects all of the compute engines together. DNA lets SAKURA-I run multiple deep neural network models concurrently with ultra-low latency while keeping TOPS utilization high. This capability is key to improving the processing speed, energy efficiency, and longevity of the system-on-chip, and it lowers total cost of ownership. The DNA IP is optimized specifically for inference on streaming and high-resolution data.
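To make "TOPS utilization" concrete: utilization is the sustained operation rate a workload actually achieves, divided by the peak rating (40 TOPS for the DNA IP described above). The sketch below is a hypothetical illustration. The helper names, the per-inference cost, and the frame rate are all made-up example values and are not SAKURA-I measurements.

```python
# Hypothetical illustration of "TOPS utilization": achieved throughput / peak rating.
# The workload numbers used below are invented examples, not SAKURA-I benchmarks.

PEAK_TOPS = 40.0  # peak rating of the DNA IP in SAKURA-I, as stated in the text


def achieved_tops(gops_per_inference: float, inferences_per_second: float) -> float:
    """Sustained throughput in TOPS for a workload.

    gops_per_inference: operations needed for one forward pass, in billions (GOPs).
    inferences_per_second: measured inference rate.
    """
    return gops_per_inference * inferences_per_second / 1000.0  # GOPs/s -> TOPS


def utilization(gops_per_inference: float, inferences_per_second: float,
                peak_tops: float = PEAK_TOPS) -> float:
    """Fraction of the peak rating that the workload actually uses (0.0 to 1.0)."""
    return achieved_tops(gops_per_inference, inferences_per_second) / peak_tops


if __name__ == "__main__":
    # Hypothetical workload: a model needing 10 GOPs per frame at 1,200 frames/s.
    tops = achieved_tops(10.0, 1200.0)
    print(f"achieved: {tops:.1f} TOPS, utilization: {utilization(10.0, 1200.0):.0%}")
```

A higher utilization means more of the rated 40 TOPS turns into useful work for a given model, so the TOPS figure alone says less about real throughput than the utilization achieved on the target network.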

Automotive

Defense & Security

Robotics & Drones

Smart Cities

Smart Manufacturing

Devices built on the EdgeCortix SAKURA-I AI co-processor are supported by EdgeCortix MERA, a heterogeneous compiler and software framework that can be installed from a public pip repository. MERA compiles and executes standard or custom convolutional neural networks (CNNs) developed in industry-standard frameworks. It has built-in integration with Apache TVM and provides a simple API for compiling deep neural network graphs and running inference on the DNA AI engine in SAKURA-I. It includes the profiling tools, code generator, and runtime needed to deploy any pre-trained deep neural network after a simple calibration and quantization step. MERA also accepts models that were quantized directly in the deep learning framework, e.g., PyTorch or TensorFlow Lite.
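As a rough illustration of what a "calibration and quantization step" generally involves, here is a minimal sketch that assumes per-tensor, asymmetric (affine) int8 quantization with simple min/max calibration. This is not MERA's implementation or API. The functions `calibrate`, `quant_params`, `quantize`, and `dequantize` are hypothetical names chosen for illustration.

```python
# Hypothetical sketch of post-training calibration + int8 quantization.
# Assumes per-tensor, asymmetric (affine) quantization with min/max calibration;
# this is NOT MERA's actual API or algorithm.
from typing import Iterable, List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def calibrate(batches: Iterable[List[float]]) -> Tuple[float, float]:
    """Observe values over calibration batches and return the (min, max) range.

    The range always includes 0.0 so that zero is exactly representable.
    """
    lo, hi = 0.0, 0.0
    for batch in batches:
        lo = min(lo, min(batch))
        hi = max(hi, max(batch))
    return lo, hi


def quant_params(lo: float, hi: float) -> Tuple[float, int]:
    """Derive an affine (scale, zero_point) pair mapping [lo, hi] onto [QMIN, QMAX]."""
    if hi == lo:  # degenerate range, e.g. an all-zero tensor
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(x: List[float], scale: float, zero_point: int) -> List[int]:
    """Map real values to clamped int8 codes: q = round(x / scale) + zero_point."""
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in x]


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate real values: x ~ (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    # Hypothetical calibration data standing in for activations from sample inputs.
    lo, hi = calibrate([[-1.0, 0.5], [2.0, 1.5]])
    scale, zp = quant_params(lo, hi)
    codes = quantize([-1.0, 0.0, 0.5, 2.0], scale, zp)
    print(scale, zp, codes, dequantize(codes, scale, zp))
```

Min/max calibration is the simplest choice. Production toolchains often use other range estimators (for example, percentile- or entropy-based) and per-channel scales to reduce accuracy loss.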



Meet the Powerful IA-840f: Enterprise-Class Intel Agilex Based FPGA Accelerator > Flexible, Customizable Hardware > oneAPI Software Support Buy at Mouser Tap Into the Power

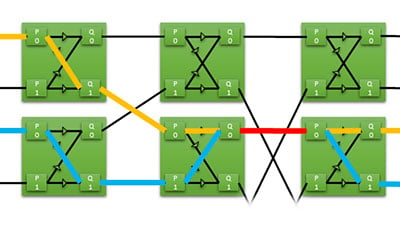

Efficient Sharing of FPGA Resources in oneAPI Building a Butterfly Crossbar Switch to Solve Resource Sharing in FPGAs The Shared Resource Problem FPGA cards usually

BittWare Webinar Arkville PCIe Gen4 Data Mover Using Intel® Agilex™ FPGAs Webinar The Arkville IP from Atomic Rules was recently updated to support Intel Agilex