BittWare Partner IP

Query Processing Unit (QPU)

Build FPGA-powered accelerators to query, analyze or reformat stored or streaming data at PCIe Gen4 speeds!

Eideticom’s Query Processing Unit (QPU) targets database users, or anyone streaming data (such as network packets), who needs query, analytics or format conversion done in hardware with low latency. Tasks can be parallelized across multiple QPU instances to scale bandwidth, and combined with other NoLoad® functions such as compression.

Built on the NoLoad® Framework

As part of Eideticom’s NoLoad computational storage framework, the QPU can be pipelined with other Computational Storage IP from Eideticom such as Compression, Decompression, Erasure Coding and Deduplication.

Software-Driven Design

Rather than requiring hardware engineers to specify the QPU parameters, users define the functions in software that runs on an on-chip processor. This provides the ease of use of high-level tools and offloads work from the main host CPU.

The QPU includes features for format conversion (text to/from binary) or standards-driven database functions. Users design their mix of functions in software—no hardware-based tools are necessary. The QPU will support native acceleration for a range of database tools as these packages move to support standards-based computational storage.

Query

Perform data queries with software-defined search and filter parameters.

Analyze

Filter, pattern-match and run analytics on streaming data such as network packet headers, or on data in SSD storage.

Reformat

Efficient reformatting of text/binary data or other format conversions.

Demo Video

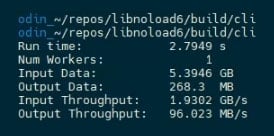

Eideticom's VP Engineering Sean Gibb demonstrates the Query Processing Unit on a 5GB CSV file

This is Sean Gibb, vice-president of engineering at Eideticom. In this video, we will demonstrate the use of Eideticom’s Query Processing Unit, or QPU, for formatting and filtering stock-ticker data stored in a comma-separated text format.

The embedded processors in Eideticom’s QPU are software programmable using C or C++, allowing you to dynamically program your filtering functions.

In addition to the embedded processors, easy-to-use, high-throughput hardware co-processors (that perform common tasks like packet capture analysis, conversion from text to binary formats, and simple filtering) are available to your embedded software to accelerate your query workloads.

In this example, we use the text-to-binary formatter to convert CSV to binary data, perform a runtime-configurable hardware filter (to filter out specific stock symbols and low-volume trades), and then perform a software filter to remove all trades where the day closes lower than it opens.

We compile the software using a GCC compiler to produce an executable that we can load through our software stack into the embedded processors. Once the software’s loaded, we run 5GB of CSV data through the Query Engine, filtering for all Microsoft stocks with a volume that exceeds 10 million.

You can see here that a single Query Engine is capable of sustaining 2GB/s of text input. We can tile down multiple Query Engines and, thanks to Eideticom’s software stack, saturate the PCIe interface to the FPGA card with the same host software.

This is just one example of what Eideticom’s software-programmable, hardware-accelerated QPU can do for you.
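The three stages described in the demo (text-to-binary formatting, a runtime-configurable symbol and volume filter, and a software filter on open versus close) can be modeled on a host in a few lines of Python. This is only an illustrative sketch of the logic, not Eideticom's firmware or API: the column layout (`symbol,open,close,volume`), the binary record format and every function name here are assumptions.

```python
import csv
import io
import struct

# Hypothetical fixed-width binary record: 8-byte symbol, two doubles, one uint64.
RECORD = struct.Struct("<8sddQ")

def text_to_binary(csv_text):
    """Stage 1: convert CSV rows (symbol,open,close,volume) to packed records."""
    for symbol, open_, close, volume in csv.reader(io.StringIO(csv_text)):
        yield RECORD.pack(symbol.encode("ascii"), float(open_), float(close), int(volume))

def configurable_filter(records, symbol, min_volume):
    """Stage 2: keep one symbol whose volume exceeds a runtime-set threshold."""
    key = symbol.encode("ascii").ljust(8, b"\0")  # struct pads "8s" with NULs
    for rec in records:
        sym, _, _, volume = RECORD.unpack(rec)
        if sym == key and volume > min_volume:
            yield rec

def software_filter(records):
    """Stage 3: drop trades where the day closes lower than it opens."""
    for rec in records:
        _, open_, close, _ = RECORD.unpack(rec)
        if close >= open_:
            yield rec

def run_query(csv_text, symbol="MSFT", min_volume=10_000_000):
    """Chain the three stages and return the surviving records as tuples."""
    stages = software_filter(
        configurable_filter(text_to_binary(csv_text), symbol, min_volume)
    )
    return [RECORD.unpack(rec) for rec in stages]

# Made-up sample rows, for illustration only.
SAMPLE = """MSFT,100.0,101.5,25000000
MSFT,101.5,99.0,30000000
MSFT,99.0,100.0,5000000
AAPL,150.0,152.0,40000000
"""

for sym, open_, close, volume in run_query(SAMPLE):
    print(sym.rstrip(b"\0").decode(), open_, close, volume)
```

Only the first sample row survives: the second closes lower than it opens, the third is below the volume threshold, and the fourth is a different symbol. Writing the stages as generators mirrors the streaming nature of the pipeline, since each record flows through all three stages without the whole file being held in memory.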

Software Defined + Scalable for Your Bandwidth Requirements

The Query Processing Unit is defined in software that runs on the FPGA (on a soft or hard processor), eliminating the need for engineers to do low-level hardware configuration.

The QPU is modular, allowing one or more units to be placed to meet a given bandwidth requirement. QPU instances can also cooperate: for example, in a file conversion distributed over eight QPUs, data that spans two units needs to be coordinated.
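One way to picture the coordination problem: if a text file is cut into equal byte ranges, a record can straddle the boundary between two units. The sketch below models one simple policy, assumed here purely for illustration and not taken from Eideticom's design, in which each cut is moved forward to the next newline so that every unit receives only whole records. The function name and the eight-way split are hypothetical.

```python
def split_on_record_boundaries(data: bytes, units: int) -> list:
    """Cut data into at most `units` chunks, each ending on a record boundary."""
    target = -(-len(data) // units)  # ceiling division: nominal bytes per unit
    chunks, start = [], 0
    while start < len(data) and len(chunks) < units - 1:
        # Search for a newline starting at the last byte of the nominal chunk.
        cut = data.find(b"\n", min(start + target, len(data)) - 1)
        end = len(data) if cut == -1 else cut + 1
        chunks.append(data[start:end])
        start = end
    if start < len(data):
        chunks.append(data[start:])  # the last unit takes whatever remains
    return chunks

# Made-up CSV-like rows, split across eight hypothetical units.
rows = b"".join(b"row%d,%d\n" % (i, i * i) for i in range(100))
chunks = split_on_record_boundaries(rows, units=8)
print(len(chunks), [len(c) for c in chunks])
```

Concatenating the chunks reproduces the input exactly, and no record is split between two units. The trade-off is that chunk sizes become slightly uneven, and finding each boundary requires looking at the data near the cut.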

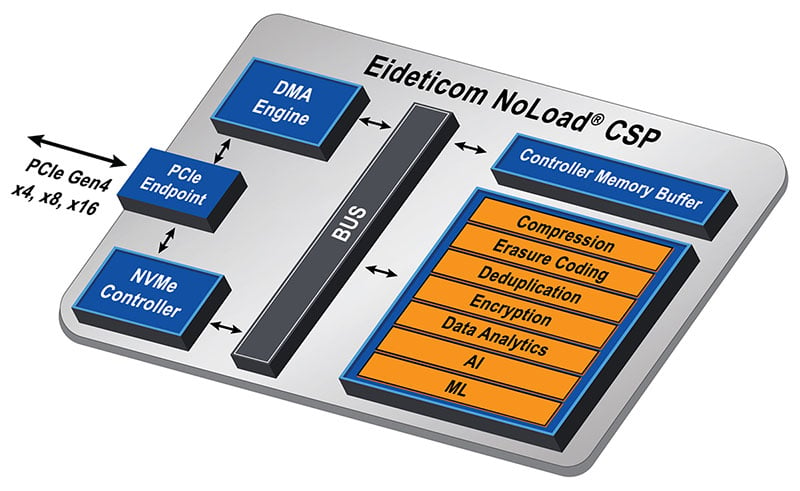

How QPU Works Inside of NoLoad®, Deployed on BittWare’s IA-220-U2 Module or IA-420F Card

Built on the NoLoad® Computational Storage Framework from Eideticom

The Query Processing Unit is a component of the NoLoad framework. The components shown in orange, such as Compression, are where users build their particular application using a software-defined approach.

Components like Compression can be added to the QPU to, for example, compress filtered data before it is moved to SSD storage.

All the accelerator functions shown are implemented in FPGA hardware, allowing for high bandwidth, low latency and CPU offload.

Use Case

Capture + Analytics Engine for Fintech

Building a High-performance, Software-defined Packet Capture and Analytics Engine

This real-world example uses the Query Processing Unit as a packet processing machine, together with the Compression Engine (another NoLoad® IP core). Packets are compressed and written to an SSD array using peer-to-peer transfers, while the QPU also extracts header (network tuple) data and computes analytics such as packet count per time period. Analytics are sent to the host as CSV data.
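The data flow in this use case can be sketched as a small host-side model. Everything here is an assumption made for illustration: `zlib` stands in for the Compression Engine, a byte buffer stands in for the SSD array (peer-to-peer transfers are not modeled), the frames are assumed to be Ethernet/IPv4 carrying TCP or UDP, and the function names and CSV columns are hypothetical.

```python
import csv
import io
import socket
import struct
import zlib
from collections import Counter

def five_tuple(frame: bytes):
    """Extract (src, dst, proto, sport, dport) from an Ethernet/IPv4 frame."""
    if len(frame) < 34 or frame[12:14] != b"\x08\x00":  # EtherType is not IPv4
        return None
    ihl = (frame[14] & 0x0F) * 4                         # IPv4 header length in bytes
    proto = frame[23]
    l4 = 14 + ihl                                        # start of TCP/UDP header
    if proto not in (6, 17) or len(frame) < l4 + 4:      # 6 = TCP, 17 = UDP
        return None
    sport, dport = struct.unpack("!HH", frame[l4:l4 + 4])
    return socket.inet_ntoa(frame[26:30]), socket.inet_ntoa(frame[30:34]), proto, sport, dport

def capture(packets, period=1.0):
    """packets: iterable of (timestamp_seconds, frame_bytes)."""
    compressor = zlib.compressobj()
    stored = bytearray()                 # stands in for the SSD array
    counts = Counter()                   # (period index, five-tuple) -> packet count
    for ts, frame in packets:
        # Length-prefix each frame so the stored stream can be split again later.
        stored += compressor.compress(struct.pack("!I", len(frame)) + frame)
        flow = five_tuple(frame)
        if flow is not None:
            counts[(int(ts // period), flow)] += 1
    stored += compressor.flush()
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["period", "src", "dst", "proto", "sport", "dport", "packets"])
    for (period_index, flow), n in sorted(counts.items()):
        writer.writerow([period_index, *flow, n])
    return bytes(stored), out.getvalue()

def make_frame(src, dst, sport, dport, payload=b""):
    """Build a minimal Ethernet/IPv4/UDP test frame (checksums left at zero)."""
    eth = b"\x00" * 12 + b"\x08\x00"
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 28 + len(payload), 0, 0, 64, 17, 0,
                     socket.inet_aton(src), socket.inet_aton(dst))
    udp = struct.pack("!HHHH", sport, dport, 8 + len(payload), 0)
    return eth + ip + udp + payload

frames = [
    (0.10, make_frame("10.0.0.1", "10.0.0.2", 5000, 443)),
    (0.20, make_frame("10.0.0.1", "10.0.0.2", 5000, 443)),
    (1.50, make_frame("10.0.0.3", "10.0.0.2", 6000, 53)),
]
compressed, report = capture(frames)
print(report)
```

The point of the sketch is the split between the two outputs: the full packet stream goes to storage in compressed form, while only the much smaller per-period, per-flow summary is returned to the host as CSV.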

Performance Advantage

The table below compares the throughput of the Query Processing Unit, using one, two and four Query Engines, with that of a multi-threaded Intel Xeon CPU.

| Avg. Packet Size | CPU | 1× Query Engine (QE) | 2× QE | 4× QE |
|---|---|---|---|---|
| 256B | 0.2 GB/s | 1.8 GB/s | 3.6 GB/s | 7.2 GB/s |
| 1024B | 0.7 GB/s | 2.0 GB/s | 4.0 GB/s | 8.0 GB/s |
| 4096B | 1.9 GB/s | 2.0 GB/s | 4.0 GB/s | 8.0 GB/s |
| 9216B | 2.7 GB/s | 2.0 GB/s | 4.0 GB/s | 8.0 GB/s |
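To make the comparison concrete, the speedup of each configuration over the CPU can be computed directly from the figures in the table above. The snippet below is just that arithmetic; the dictionary layout and function name are illustrative choices.

```python
# Throughput in GB/s, copied from the table above.
# packet size (bytes): (CPU, 1x QE, 2x QE, 4x QE)
TABLE = {
    256:  (0.2, 1.8, 3.6, 7.2),
    1024: (0.7, 2.0, 4.0, 8.0),
    4096: (1.9, 2.0, 4.0, 8.0),
    9216: (2.7, 2.0, 4.0, 8.0),
}

def speedups(table):
    """Return {packet_size: [speedup of 1x, 2x, 4x QE relative to the CPU]}."""
    return {
        size: [round(qe / cpu, 2) for qe in qes]
        for size, (cpu, *qes) in table.items()
    }

for size, ratios in speedups(TABLE).items():
    print(f"{size}B:", ", ".join(f"{r}x" for r in ratios))
```

By these figures, the advantage is largest for small packets: at 256B a single Query Engine delivers about 9 times the CPU throughput, and four deliver about 36 times. At 9216B a single Query Engine reaches only about 0.74 times the CPU figure, so two or more engines are needed to exceed it.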

Use Case

Database Query Acceleration

Accelerate Database Queries and More

In a database acceleration configuration, the Query Processing Unit can perform a range of functions from CPU offload to data type format conversions.

- Implements CSV/JSON/Parquet parsing and query execution

- 70-80% improved CPU offload with 5-10× increased performance

- Deployment flexibility in compute, storage and cloud

- NVMe driver achieves low-latency, high-throughput data transfers

- NoLoad Query Processing Unit can be paired with NoLoad compression and decompression engines

Compatible FPGA Cards

The Query Processing Unit targets BittWare’s cards with Intel Agilex FPGAs.

Interested in Pricing or More Information?

Our technical sales team is ready to provide availability and configuration information, or answer your technical questions.

"*" indicates required fields