Solutions

Broadcast Video

Hardware acceleration for composable SMPTE ST 2110 IP video solutions

Flexible Broadcast Solutions

FPGAs have been used in broadcast video for decades, but innovative companies like manifold technologies are now using these devices to build on-demand, software-oriented solutions. Flexibility for the user goes up while the footprint shrinks: think of a 1U TeraBox server replacing a whole rack of CPU-based gear and you’ll get the picture.

Need custom solutions? We are able to create a range of options from simple add-on cards to full custom designs. Visit our customization page for more details.

Our FPGA accelerator cards are also great candidates for modern IP-switched video services. With standards like SMPTE ST 2110, moving audio and video over the high-speed I/O ports on our cards is easy.

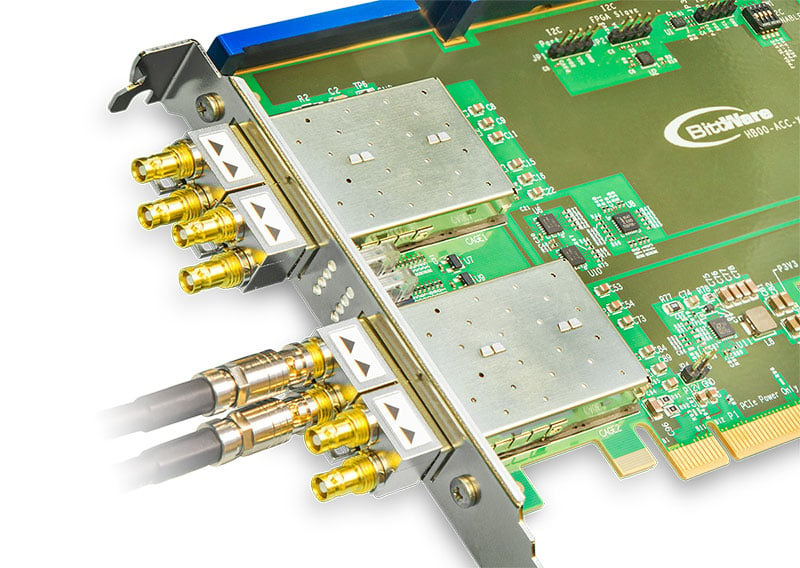

This is how manifold uses BittWare cards equipped with Altera Agilex 7 FPGAs: video comes in and goes out over the QSFP ports. In the case of the IA-860m (pictured next to the TeraBox 1U/4-card server), they also use on-package HBM memory to provide a composable pipeline for nearly limitless A/V configurations. Watch the video below to learn more about how manifold technologies is providing a new class of broadcast multiviewer solution.

Video

Transcript

(Marcus)

Hello this is Marcus with BittWare, and I’m excited to speak with Erling from manifold technologies.

This company provides broadcast live production infrastructure, but instead of using proprietary hardware, their manifold CLOUD software uses COTS, including options for BittWare FPGA cards and our TeraBox™ servers.

So, Erling, let’s start with a quick background of your company.

(Erling)

manifold—as a company—we’re based outside Frankfurt, Germany. The history of the people inside the company goes back two-three decades now—basically working on professional broadcast technology throughout all the time, and it’s changed a lot over the last 20-30 years as you can imagine. Back then it was analog. Then there was a shift to digital, moving then on to IP technology and now we’re—with manifold—we’re doing the next step.

(Marcus)

Okay so now let’s talk about manifold CLOUD, which is described as a software suite for live production, and that’s separated from the hardware itself which is commercial off the shelf, or COTS. Can you describe how using COTS is different from what broadcast customers might be used to?

(Erling)

manifold CLOUD is a new product and is really the evolution of 20-30 years of broadcast product technology. So, for a long, long time, broadcast products, which we have developed, have been really industry specific. And the reason and the rationale behind this has been the…really the proprietary formats that our industry has been using—it started up with analog video moving into digitized or digital video with SDI.

Now, over the last just decade or so, the IP and the IT industry were finally at…running at the bandwidths and the speed that our industry demanded, which was at least one-and-a-half gigabits-per-second line rates. And this was not possible to do with IP technology. And then, with a change in the standards—which has meant a move from proprietary interfaces for video over to IP transport for video—this has allowed us now to start making full use of the IT technology.

And in particular, this is allowing us to make use of high-speed IP and IT accelerators, such as products from yourselves, from BittWare, with FPGA accelerators. And this, in turn, means that we’re now making software-only products. We can now make software that runs inside acceleration cards that we get from the open market that not only support our industry but support many other industries like finance and telecommunications, etc. So, we can make use of the…leverage these much bigger industries and make use of the advancements of this technology even for our smaller industry.

(Marcus)

Okay so you’ve essentially moved to focus on software that runs on a number of possible hardware accelerators, and those aren’t even specific to manifold?

(Erling)

Yeah, so this is one of the main benefits of manifold CLOUD, and something that is very, very different from what we’ve been doing for decades.

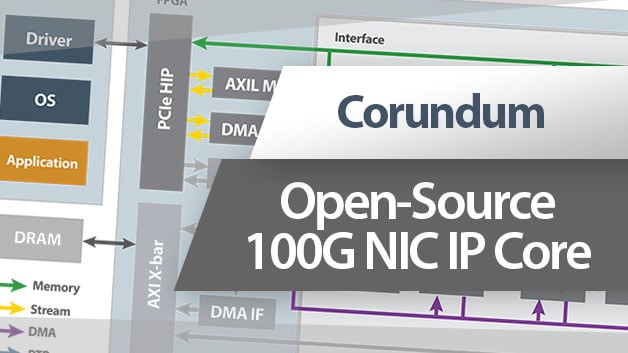

What we do with manifold CLOUD is that we support programmable acceleration cards or PACs, and these are basically FPGAs that sit on PCI Express cards and are available from different manufacturers.

The great thing about manifold is that you now have the ability to buy software that performs the broadcast-specific services that our customers are used to. Very importantly, with the performance that our customers are used to, which means, you know, millisecond latency, super-high bandwidth performance, etc., but with support from different off-the-shelf manufacturers that they can get from all over the world, really.

(Marcus)

Okay got it—hardware options that are COTS gives your customers the performance they expect as directed by the manifold CLOUD software. But I’ve been reading it’s more than just, say, switching to BittWare cards instead of building your own—because even if you switched to a BittWare card that BittWare card could be fixed down to certain input/output configs like the old way of proprietary hardware.

So instead, from what I understand, you talk about this on-demand configurable pool of resources. Can you talk me through that?

(Erling)

So yeah that’s one of the other major architectural changes that we’ve done with manifold CLOUD.

One change is that we now support a multitude of off-the-shelf acceleration cards. But the second change we’ve done from an architecture perspective is we’re moving into what we call a service-based architecture.

What happens is that when the operator—the user—asks for a service, manifold will place that service on an available hardware somewhere in this cluster—in this cloud—and then provide that service back to you.

So, the beauty with this is that you’re moving to COTS. You’re moving to a cloud/service-based architecture so you can start creating things on demand.

This is something that the IT guys have been used to for well over a decade now with virtualization and public cloud being very popular.

But in the broadcast industry this is really revolutionary! In the broadcast industry, for years and years and years, an application has basically meant that you have to buy an appliance—you have to buy hardware that does this particular function, and it will always do that. And if you need it for five minutes or five years it doesn’t matter because you basically have to buy it and then put into your racks.

This is now completely different with manifold which allows you to use compute, and then a service-based architecture where you license per service. You can use it for one minute, you can use it for two years whatever you need. Turn up, turn down—and when the capacity isn’t needed for that particular service or program it can be repurposed for something else.

This is the architecture of manifold CLOUD.
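The place-on-demand idea described above can be sketched in a few lines of Python. This is a minimal illustration only, not manifold CLOUD's actual scheduler: the `Card` and `Cluster` classes, the first-fit placement rule, and the bandwidth figures are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    """Hypothetical accelerator card with a fixed bandwidth budget."""
    name: str
    capacity_gbps: float
    services: dict = field(default_factory=dict)  # service id -> Gb/s reserved

    @property
    def free_gbps(self) -> float:
        return self.capacity_gbps - sum(self.services.values())


class Cluster:
    """Hypothetical pool of cards that services are placed on and removed from."""

    def __init__(self, cards):
        self.cards = list(cards)
        self._next_id = 1

    def start_service(self, kind: str, gbps: float) -> str:
        # First-fit placement (an assumption): use the first card with room.
        for card in self.cards:
            if card.free_gbps >= gbps:
                service_id = f"{kind}-{self._next_id}"
                self._next_id += 1
                card.services[service_id] = gbps
                return service_id
        raise RuntimeError("no capacity available in the cluster")

    def stop_service(self, service_id: str) -> None:
        # Releasing a service returns its bandwidth to the pool.
        for card in self.cards:
            if card.services.pop(service_id, None) is not None:
                return
        raise KeyError(service_id)

    def locate(self, service_id: str):
        # Return the name of the card hosting the service, or None.
        for card in self.cards:
            if service_id in card.services:
                return card.name
        return None
```

In this sketch the user never picks a card: they ask for a service, and capacity freed by `stop_service` is immediately available for a different service.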

(Marcus)

Okay so you’ve got COTS hardware running this manifold CLOUD service-based architecture, letting you spin up and down video services, just like we’re used to in today’s cloud service providers for other things.

However, there’s something we haven’t talked about yet, and I wanted to ask you about that, and that’s really the more nuts and bolts of performance and density. Now for manifold, that comes from tapping into FPGAs, and specifically Altera FPGAs on the BittWare cards, and also an innovative way of using high-bandwidth memory or HBM.

So, without going too deep, how have you basically harnessed the power of BittWare’s cards to switch high-bandwidth uncompressed 4K video (for example) for these tier-1 broadcast customers?

(Erling)

Two things that we want to achieve by moving to COTS and, in particular, COTS FPGA with high-bandwidth memory.

So, let me first address the move to COTS and why we move to COTS. I think we moved to COTS for the same reason a lot of things move to COTS: it’s really moving to economies of scale.

So, for us (and I think for all of our clients) it’s, of course, been interesting to be able to get things cheaper (laughter) to be honest, okay? At least cheaper “per bit” so to speak. But also, for us, it’s very important to leverage the economics of scale when it comes to the development speed of companies such as yourself.

So, moving to COTS FPGA and programmable acceleration cards: it gives us two things.

It gives us the ability to retain the performance and basically the quality that our customers have today and that they continue to expect to have—but to leverage these R&D developments (the speed, the performance) of off-the-shelf FPGA acceleration where you’re already talking about speeds that are 10 times really that we’re able to do ourselves if we had to do our own hardware development.

So that’s part one.

Part one, again, the price, performance, and really speed of development that we get by moving to acceleration cards from vendors such as yourself. And number two is how we’re very specifically working with high-bandwidth memory, okay?

High-bandwidth memory is basically a large chunk of memory that’s very fast and sits very close to the FPGA. If you compare this to CPU design (and you know a little bit about CPU design) think of this as a very large, like, a layer one/layer two cache that sits right next to your CPU and basically caches and buffers the instruction set. We use this in a very particular way.

So, first of all, nowadays (and for the last decade or so) professional broadcast video has moved to IP— and this is all IP packets now. This is also some legacy interfaces and stuff, but really—the main processing part—nowadays everything is moving to IP.

So, when packets arrive into the network interface card on one of these accelerators, what manifold is going to do is it’s going to parse these packets, it’s going to look at them and say, “What are you? Are you a video stream? Are you an audio stream? Are you a metadata stream? What are you?” Then, after it finds out what it is, “This is a 1080p60…this is a 32-channel audio stream,” it’s going to write this data straight into memory, and we’re able to do this at line rate speeds.

So, if we have 400 gigabits of IP traffic coming into us—all sorts of video, audio, all stuff—we can write all of that straight into memory.
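The parse-classify-write step described above might look roughly like the following software sketch. It is an illustration under stated assumptions, not the FPGA implementation: the `Packet` type, the `FLOWS` table keyed by destination address and UDP port, and the example addresses are all hypothetical, and in a real ST 2110 system the stream types would typically come from out-of-band session descriptions rather than a hard-coded table.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Hypothetical, already-decapsulated packet."""
    dst: str        # destination IP address
    port: int       # destination UDP port
    payload: bytes  # media payload


# Hypothetical flow table: (address, port) -> (stream kind, format description).
FLOWS = {
    ("239.1.1.1", 5004): ("video", "1080p60"),
    ("239.1.1.2", 5004): ("audio", "32-channel"),
    ("239.1.1.3", 5004): ("metadata", "ancillary"),
}


def ingest(packets, flows=FLOWS):
    """Classify each packet and append its payload to that stream's buffer.

    Returns (memory, dropped): memory maps a stream descriptor to a
    bytearray standing in for the card's memory; dropped counts packets
    that matched no known flow.
    """
    memory = defaultdict(bytearray)
    dropped = 0
    for pkt in packets:
        descriptor = flows.get((pkt.dst, pkt.port))
        if descriptor is None:
            dropped += 1
            continue
        memory[descriptor] += pkt.payload
    return dict(memory), dropped
```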

And secondly, instead of traditional broadcast product designs—which is basically that you build pipelines inside your FPGA for video, audio, and data processing—instead, what we’re doing is we build, for example, one color corrector, we build one encoder, we build one audio shuffler.

And then what manifold does…if the manifold user asks for an encoder service or a color corrector service, that service gets placed on this card, this data gets sent into this card, gets written into memory, and then what we do is we basically multiplex this service, we basically oversample it across all the data inside the memory space which needs to have this service done.

So, this means you’re no longer limited by the amount of paths that you build—because you build only one of each—you’re only limited by the raw bandwidth into the system.
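As a rough software analogy for "build one of each and multiplex it", the sketch below runs a single processing block over every stream buffer that requested it, bounded only by a total throughput budget. The names `invert` and `time_share` and the per-pass sample budget are assumptions for illustration; the real design runs in FPGA logic against HBM, not in a Python loop.

```python
def invert(sample: int) -> int:
    """Stand-in for one processing block (for example, one color corrector)."""
    return 255 - sample


def time_share(block, memory, requested, budget_samples):
    """Apply a single block instance to every requested stream in memory.

    memory maps stream id -> bytearray of 8-bit samples. budget_samples is
    a stand-in for raw bandwidth: the total number of samples the block can
    process in one pass. The limit is on total work, not on stream count.
    """
    needed = sum(len(memory[stream_id]) for stream_id in requested)
    if needed > budget_samples:
        raise ValueError(f"need {needed} samples, budget is {budget_samples}")
    for stream_id in requested:
        buffer = memory[stream_id]
        for index, sample in enumerate(buffer):
            buffer[index] = block(sample)  # processed in place
    return needed
```

The point of the sketch is that adding a stream does not require another copy of the block. It only consumes more of the shared budget.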

So, this in turn means that we can already, from day one, support things like 16K video.

This is a huge change for products in this space, and it basically, when you start doing calculations—so “How much can this product perform?”—it’s a simple question of, well, “How much bandwidth does it have?” It has 400 gigabits. Well, if you run 3 gig services, that means you’re doing 128 of them. “But actually…I’m running 1.5 gig services,” well, then you do double. “But I run a mix of it at…” whatever, doesn’t matter, you can mix and match anything you want.

It’s basically the bandwidth usage of the raw source compared to the bandwidth capacity of the acceleration card that determines the performance of the card. And this is a big change compared to everything else that’s out there on the market at the moment.
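The capacity arithmetic above can be written out directly. This sketch assumes a 400 Gb/s card and the nominal 3 and 1.5 Gb/s service rates mentioned in the interview; the function names are hypothetical. Plain division ignores packet overhead and headroom, so it gives slightly higher upper bounds (133 and 266) than the round figures quoted in conversation.

```python
def max_services(capacity_gbps: float, service_gbps: float) -> int:
    """Upper bound on identical services that fit in the raw bandwidth."""
    return int(capacity_gbps // service_gbps)


def mix_fits(capacity_gbps: float, mix: dict) -> bool:
    """Check whether a mix of services fits; mix maps rate in Gb/s -> count."""
    used = sum(rate * count for rate, count in mix.items())
    return used <= capacity_gbps


print(max_services(400, 3.0))                   # 133
print(max_services(400, 1.5))                   # 266
print(mix_fits(400, {3.0: 100, 1.5: 60}))       # True  (390 Gb/s used)
print(mix_fits(400, {3.0: 128, 1.5: 20}))       # False (414 Gb/s used)
```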

(Marcus)

Okay so it sounds like you’re saying…if we could see the utilization of basically a 1U rack of cards—say four cards—if we could see inside what’s going on…instead of some fixed limit per card in/out, it’s really just this large pool of bandwidth that always gets filled in without gaps, even when the pieces might not neatly fit in some older definition of a round number of ports like 4 or 8 or 12? Basically, it just fills in as much as you need—no gaps?

(Erling)

First of all, you’re correct, but secondly, I’ll give you a little bit of an explanation here. Our industry is so used—because they have been told this for decades—that you buy a card, you will do 10 inputs or 12 inputs or something, but you buy this card. So, they’re so used to always maximizing the usage of this product you just bought.

And then when it comes into space—and people look into quotes from different manufacturers—it really comes down to, like, “What’s the price per port? How much am I paying here for each of these ports?” But with this new technology and with this new pricing—which is now service-based where you pay for what you use only, and only when you need it—this throws all the old calculations out the window.

Your existing cards are already in the vicinity of 10 times the performance that we were doing ourselves in FPGA not that many years ago. So, when I talk to clients now, it’s not that… I have to tell them, “Hey, forget about trying to maximize the performance of this card, like, you’re not even going to reach it! Two or three of these cards are going to replace a rack of equipment that you currently have.” But that doesn’t matter because you should pay for what you use, not for…to squeeze the most out of the hardware.

If you look at sports, if you look at news, like things like elections, they have huge demands for a short amount of time. And in the past, this meant buying or renting huge amounts of equipment. Now, because the performance of these acceleration cards is so large, they are likely to be able to spin up their additional services without changing their infrastructure or maybe with very little additional hardware, if needed, to spin it up and then just take it down again when they don’t need it. And this is a big change.

(Marcus)

It’s amazing to see how you’re really maximizing not only Altera FPGA power, but that HBM memory that’s on the same package that gives so much more flexibility than even simply using an FPGA with those fixed pipelines.

(Erling)

Yep, yep.

(Marcus)

So, let’s get back now to the software side, although it should be noted you’re not using CPUs in all these systems to handle the video itself—it’s really hardware acceleration, those FPGAs, taking off the load, but with the flexibility of software.

Let’s look a little bit more into this—the software side. So, you talk about an API-first approach for example. What are some of the things that manifold CLOUD does that’s unique here?

(Erling)

Sure. The API and the user interface are also very different in manifold than everything that we’ve done before and, we think, from a lot of other vendors as well.

This is another part of the service-based architecture we’ve moved to, and a lot of this stuff is that we basically learn from the best. What is very common and very popular right now is public cloud solutions, right? It’s the ability to…you log into your AWS or your Google Cloud account from your single sign-on user interface and you…basically create what you want.

manifold CLOUD is built around the same architecture. There’s a single sign-on web user interface that then administrates the entire cluster. All the underlying hardware that services manifold CLOUD (this particular cluster) that then runs the software on top of it, which is then pointed to this single sign-on web user interface.

So, we have one API. It’s a stable API with an API-first focus. We think of this API as a product really, where you can interface to, you can request services, you can get statistics information, all of that stuff.

As the product evolves, new API calls become available—but the old ones are not deprecated—so you can kind-of build towards this infrastructure with confidence that it’s not just going to break as the next software is released. We think this is a good approach. We think other companies have been very successful in developing products with this approach, and we think that by bringing this to the broadcast industry, it’s going to make a big change.

Now, service-based architecture, RESTful API, API-first focus, the API creates the services you want. It’s a nice stable API. You can take things down and bring things up again. And I think this is a big change for our customers.
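The "new calls are added, old ones are not deprecated" promise can be expressed as a simple compatibility check between releases. The call names below are invented purely for illustration and are not taken from the manifold CLOUD API.

```python
# Hypothetical call sets for two releases; the names are illustrative only.
RELEASE_1 = {"create_service", "delete_service", "get_statistics"}
RELEASE_2 = RELEASE_1 | {"list_services"}


def removed_calls(old_calls, new_calls):
    """Return the calls present in the old release but missing in the new one."""
    return set(old_calls) - set(new_calls)


def is_backward_compatible(old_calls, new_calls) -> bool:
    """A release is additive if every old call still exists."""
    return not removed_calls(old_calls, new_calls)
```

A check like this could run in a release pipeline so that integrations written against an earlier release keep working.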

(Marcus)

Now let me ask you a bit of a different question. So, we’ve been talking about all the capabilities of spinning these services up and down, which probably signals in our viewers’ heads, “Oh these are IP-switched video…and is that all they support?” So, they’re asking, you know, “What about my SDI ins and outs?” …those physical ports. Can you just talk me through how you support that?

(Erling)

We have a great partnership with Arkona Technologies, and we’ve really been co-promoting this with them. So, manifold CLOUD as software also runs on the AT300, which is a hardware product by Arkona—part of their Blade Runner platform.

So, you can mix and match. You can put manifold on top of the AT300, which gives you legacy SDI (Serial Digital Interface) connectors in and out the…let’s call it this “cluster.”

What I fully expect when we talk to clients at the moment is really a hybrid mix. So, they have some components, some legacy networking still, which can be served by the AT300, and then they put maybe the core of their compute in acceleration cards. And these two can work together.

So, manifold CLOUD as a product abstracts the hardware from underneath it. So as a user, you just see audio, video, and data services and sources, and you don’t actually see what underlying hardware computes this or is the interface in and out of this ecosystem.

(Marcus)

Okay so that covers the legacy connections such as SDI, but what about, say, down the road with the cards themselves—will future cards be able to work with cards that are purchased today? My assumption is that future cards—they have more capacity per card so you can add to that pool of resources that we talked about—but will those new cards mix seamlessly with today’s cards?

(Erling)

Yeah, that’s correct. So, just to get a little bit technical here, we use one of the features called the Acceleration Functional Unit, or AFU. This is really another big driver and benefit for us because we basically develop products…sorry…software that goes… or firmware, really, that goes into these AFUs. You can think of the AFU as kind of like a virtual CPU or at least an abstraction of what sits underneath.

The good thing with the Acceleration Functional Unit concept is the fact that we can go from a three-year development cycle to a three-month development cycle. This makes us…that makes us able to ride on the coattails of you guys and the chipmaker industry. So, when new hardware comes out, we can much faster be able to provide products for it. And then it will work in conjunction with your existing hardware as well, so they can just add more capacity if they need to.

(Marcus)

Okay, great. So now last question, and this is about the future—what do you see with manifold technologies say three to five years out?

(Erling)

I think the next logical step for us is to expand into other services, as I mentioned, moving further into encoding, decoding, maybe recording services. So, we really have the ability to add a lot more services into our ecosystem and let the clients simply add the services that they like and when they want it and don’t use it when they don’t want it, and you know, use as they see fit.

But what I see manifold being in three to five years, basically, is a widening of the product portfolio to do more and different services on top of this hardware architecture.

(Marcus)

Excellent, well thank you for all these answers and for those in the audience who want to know more about manifold CLOUD, where should they go?

(Erling)

Well, ManifoldTech.tv is the website, you can contact us from there.

(Marcus)

Well, I appreciate the technology, all the things that you’ve done up to this point. Look forward to how it grows into the future. So, again, thank you so much for talking with us, Erling.

(Erling)

Thanks so much, Marcus. I really appreciate it.

(Marcus)

Okay so that’s how manifold technologies is using BittWare accelerators, specifically those with Altera FPGAs, for broadcast. If you’re looking for more information on these accelerators, visit our website at BittWare.com, and thanks for watching!

Custom SDI Add-on

Pictured is a custom add-on card for our XUP-P3R board. It features several video SFP ports supporting SDI ins/outs, connected directly to the FPGA.