Powerful AI Inference

Powered by BittWare

SC24 Video

Transcript

Hi, my name is Cameron McCaskill, and I run sales for a company called Positron AI. We’re actually here at the Supercomputing event with our partner BittWare, and what I’m going to do first is show you a demo of what we’ve built, and then I’ll explain how we’ve gotten from point A to point B.

What you’re going to see is a demo of two of these cards—two of these AI accelerators—that are produced by BittWare, that have programmed chips inside the card that are specifically focused on AI inference acceleration…and that’s going to be based on a comparison against the H100 card from NVIDIA.

On the left of the screen, you will see two of the H100 cards from NVIDIA running Llama 3.1 8B, which is a very popular model from Meta, and on the right, you’ll see two of our cards from Positron running the same model. I’m going to run an industry-standard benchmark called MMLU Pro, and once I click that button, you’ll see the speed and the power consumption of each of the two solutions.

On the left, you’ll see NVIDIA is running about 140 tokens per second for this model, Llama 3.1 8B, and it’s about 3 watts per token. On the right, you see the two Positron cards are running at almost double that speed and about a third of the power.

The value proposition of Positron is that we’ve made a much more efficient solution, and not just from a performance perspective: the cost of the server is much less than a DGX platform from NVIDIA, and the power consumption is about a third of what NVIDIA consumes to run AI model inference.

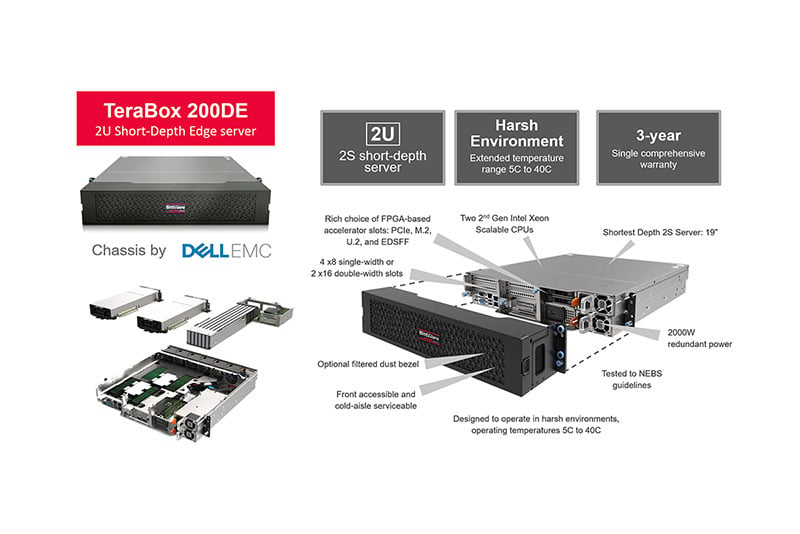

Let me tell you a little bit more about how we’ve done it. The product we’re shipping today is called Atlas. It is an eight-card system, so it has eight of these cards I mentioned before inside the server, and that is comparable to the eight-accelerator-card version of the DGX H100 box from NVIDIA.

This box is now shipping. We think that, ultimately, the way people measure the success of their hardware or hardware-software combination for running an AI model is going to boil down to two things: performance per dollar and performance per watt.

Today, Positron is showing about a 5x improvement in terms of performance per watt versus a DGX H100 and a 4x improvement in terms of performance per dollar over DGX H100 from NVIDIA.
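Editor’s note: the two metrics named above can be sketched as simple ratios. The snippet below is a minimal illustration, assuming throughput is measured in tokens per second; the function names are hypothetical, and the input values are placeholders chosen only to make the arithmetic easy to follow. They are not measurements of Atlas, the DGX H100, or any other real system.

```python
def perf_per_watt(tokens_per_second, watts):
    """Throughput delivered per watt of power drawn."""
    return tokens_per_second / watts


def perf_per_dollar(tokens_per_second, system_cost_dollars):
    """Throughput delivered per dollar of system cost."""
    return tokens_per_second / system_cost_dollars


def improvement(candidate_metric, baseline_metric):
    """How many times better the candidate metric is than the baseline."""
    return candidate_metric / baseline_metric


# Placeholder values for illustration only; NOT measurements of any real system.
baseline = {"tokens_per_second": 100.0, "watts": 1000.0, "cost": 100_000.0}
candidate = {"tokens_per_second": 150.0, "watts": 500.0, "cost": 75_000.0}

watt_gain = improvement(
    perf_per_watt(candidate["tokens_per_second"], candidate["watts"]),
    perf_per_watt(baseline["tokens_per_second"], baseline["watts"]),
)
dollar_gain = improvement(
    perf_per_dollar(candidate["tokens_per_second"], candidate["cost"]),
    perf_per_dollar(baseline["tokens_per_second"], baseline["cost"]),
)

print(f"performance per watt:   {watt_gain:.1f}x")
print(f"performance per dollar: {dollar_gain:.1f}x")
```

A comparison like this is only meaningful when both systems run the same model and benchmark, as in the side-by-side demo described earlier.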

The other thing we wanted to do is make it an appliance. Think of this as a box that you just plug in, and it just works. From a software perspective, we decided not to go down the path that many AI chip startups have taken of building their own compilers, where you then have to go and relearn how to use a new software package. We said, “What if we just leverage the Transformers library that’s on Hugging Face?” Hugging Face has over a million transformer models uploaded to it today, and so it’s the largest repository of open-source large language models. As long as you’re able to take the .pt or the .safetensors file off of Hugging Face, it’s literally a drag-and-drop experience to the Positron AI hardware. So again, it’s that appliance-like experience.
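Editor’s note: for readers unfamiliar with the .safetensors files mentioned above, the sketch below shows what such a checkpoint contains. It relies only on the published safetensors layout (an 8-byte little-endian header length, a JSON header, then raw tensor bytes) and the Python standard library. It is not Positron’s loading path, which the talk does not describe; the function names and the tensor name `example.weight` are hypothetical.

```python
import io
import json
import struct


def read_safetensors_header(stream):
    """Read the JSON header from a binary stream positioned at the file start."""
    (header_len,) = struct.unpack("<Q", stream.read(8))  # little-endian uint64
    return json.loads(stream.read(header_len).decode("utf-8"))


def summarize_tensors(header):
    """Map tensor name -> (dtype, shape), skipping the optional metadata entry."""
    return {
        name: (info["dtype"], tuple(info["shape"]))
        for name, info in header.items()
        if name != "__metadata__"
    }


def build_example_file():
    """Build a tiny in-memory payload with one hypothetical 2x2 F16 tensor."""
    tensor_bytes = bytes(8)  # 4 values x 2 bytes each, all zeros
    header = {
        "example.weight": {"dtype": "F16", "shape": [2, 2], "data_offsets": [0, 8]}
    }
    header_bytes = json.dumps(header).encode("utf-8")
    return io.BytesIO(struct.pack("<Q", len(header_bytes)) + header_bytes + tensor_bytes)


print(summarize_tensors(read_safetensors_header(build_example_file())))
```

For a file downloaded from Hugging Face, the same `read_safetensors_header` call would work on `open(path, "rb")`, listing every tensor’s name, dtype, and shape without loading the weights.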

Another example I will give you as to how we’ve made this a plug-and-play experience: for our first customer, the time from the moment we started opening the box until we were running large language models through that server was a grand total of 38 minutes. It is truly a plug-and-play experience for our customers.

Another advantage that we like to talk about concerns all of the data centers out there, particularly ones built before 2020, that I call “AI orphaned” because, as an example, they may only have 10 kW of power available to each rack.

Well, in that power envelope, you can literally only fit one DGX H100, because when the DGX H100 is running inference, it’s burning about 6,000 watts. So, if you only have a 10 kW rack, you can only fit one of those servers in that footprint.

On the other hand, with our Atlas server, we’re only burning 2,000 watts running AI inference at full speed, so you can actually fit five of our servers in that 10 kW footprint. It’s allowed us to go after some of the data centers that feel today they’re sort of orphaned by AI: they’re not able to participate in this fast-growing business, and we show them a solution that can operate in their 10 kW racks very effectively and very cost-effectively as well.
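Editor’s note: the rack arithmetic above can be checked in a few lines. This is a minimal sketch using the power figures quoted in the talk (10 kW per rack, about 6,000 W for a DGX H100 and 2,000 W for Atlas during inference); the function and constant names are hypothetical, and the calculation ignores any headroom a real deployment would reserve for networking or other equipment.

```python
def servers_per_rack(rack_budget_watts, server_watts):
    """Number of whole servers that fit within a rack's power budget."""
    return rack_budget_watts // server_watts


# Figures quoted in the talk; names are hypothetical.
RACK_BUDGET_W = 10_000
DGX_H100_INFERENCE_W = 6_000
ATLAS_INFERENCE_W = 2_000

dgx_count = servers_per_rack(RACK_BUDGET_W, DGX_H100_INFERENCE_W)
atlas_count = servers_per_rack(RACK_BUDGET_W, ATLAS_INFERENCE_W)

print(f"DGX H100 servers per 10 kW rack: {dgx_count}")   # 1
print(f"Atlas servers per 10 kW rack:    {atlas_count}")  # 5
```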

And so just in closing, here is what we’ve built to date, and again, the company’s been around since April of 2023. We’ve got what we call Positron Test Flight. Really, all that is, is we’ve hosted some of these Atlas servers in our own engineering facility, and then we enable customers to have a dedicated instance of that hardware, and they can test for free, so try before you buy.

The Atlas server you see here in the middle of the slide is the product that we’re shipping today and have been shipping since August. The accelerator cards I’ve shown you a few times, we do have some customers that have said, “Can I just buy cards from you and install them in my server?” The answer is yes, but for the most part today, we’re focused on selling the appliance. But we will sell cards if it’s the right level of volume and it’s not too complex in terms of the number of servers that we have to deploy within.

That’s Positron AI in a nutshell. While we’re already more performant than NVIDIA for popular models like Llama 3.1 8B, we can still double today’s performance just through software on the exact same hardware platform. We’d be excited to talk to you more.

Interested in pricing or more information?

Our technical sales team can provide availability and configuration information or answer your technical questions.