Marcus Weddle, BittWare

Today I’m speaking with Megh Computing about their AI-driven video analytics solution.

I wanted to begin with some statistics that Megh has cited before: there are going to be a billion new cameras this year, but amazingly, 95% of the video from those cameras will never be analyzed. This is becoming a common story: many new sensors all over the place, but we’re at a loss to do anything effective with the data, which means paying for the sensors without a good return on investment from analytics on that data.

So here to talk with me about making video analytics easier to access is PK Gupta with Megh Computing. Welcome!

PK Gupta, Megh Computing

Hi, Marcus—yeah, glad to be here. Thank you.

Marcus

So as I said, we’ve got lots of cameras in the world, lots of sensors everywhere. For example, a factory might have 50 IP cameras connected to recorders, but no way to actually take action on that footage. Can you talk me through how this gets solved?

PK

Yeah, absolutely. So the scenario you described is very common: people in a small factory or small business have tens of cameras, or a few cameras, connected to an NVR (network video recorder). And the typical usage there is they record the videos and maybe they look at it for the majority of the videos. But they don’t really utilize the video, the information in the video, properly, right?

So what’s missing is the ability to process the video in real time, to extract intelligence from the video and to create actionable insights that add a lot of value to the business. That is what’s missing, and that is why we at Megh are providing a video analytics solution to address this problem.

Marcus

So within a factory, and maybe also in other use cases, what are examples of analytics? I ask because that word refers to specific actionable outcomes that the user can analyze, rather than simply watching the footage.

PK

Yes. So let me give you some examples of use cases in a factory setting. If we are using intelligent video analytics with AI, some typical use cases that we would support are for worker safety and process improvements. As an example, for worker safety, we support use cases for PPE compliance to make sure workers are wearing hard hats, boots or helmets, whatever’s required as part of that work environment.

We also have use cases to support collision avoidance for worker safety, and some other use cases like avoiding spills or tracking if a worker has fallen. So these are use cases that we can apply to a smart factory for worker safety.

You can also use video analytics to monitor the operational process environment in a factory and suggest improvements for additional efficiency. For example, the number of people working on a process line, how efficiently they are working and how they’re utilizing their time in their workspace.

All of that can be used to improve work efficiency—overall process efficiencies in a factory.

Besides factories there are use cases we can support in smart buildings for physical security like loiter detection, intrusion detection. And similarly, there are other use cases that we can support in settings in smart cities.

Marcus

So now let’s focus a bit on what you mean specifically by actionable insights. I think we’re all familiar with the idea of recording video from a security camera to a hard drive, and maybe doing some very simple movement sensing, you know, sensitive to certain movements in the frame or something like that. But the Megh VAS suite goes far beyond that. I think we have some footage of it running demo analytics, so if you can talk me through this as it plays…

PK

Yeah. So analytics is the ability to analyze a video stream and apply some business rules to get insights from the video that can be used to improve business processes or, you know, reduce operational risk.

In some of the clips that you’re seeing, we have examples of intrusion detection for a smart building application, or even a factory setting, where, if somebody is trying to intrude, we monitor, we identify the person and raise the alarm.

The alarm typically goes out as a notification to an application and the operators can then act on that. So there are examples of intrusion detection. There are examples of loiter detection, where if somebody is loitering outside a building and, for public safety reasons, the operators want to be able to track that and deal with that situation, right, to improve safety of their workers or visitors.

These are specific examples where we use video analytics to derive actionable insights in a physical security setting.
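As an illustration of the kind of business rule PK describes, here is a minimal Python sketch of a loiter rule. It assumes an upstream detector and tracker already produce per-person positions with timestamps; the `Detection` and `Zone` types, the `loiter_alerts` function and the 30-second threshold are all hypothetical names and values chosen for this example, not part of the Megh VAS product.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    track_id: int     # hypothetical ID assigned to one person by a tracker
    timestamp: float  # seconds since the stream started
    x: float          # centre of the person's bounding box, in pixels
    y: float


@dataclass
class Zone:
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, d: Detection) -> bool:
        return self.x_min <= d.x <= self.x_max and self.y_min <= d.y <= self.y_max


def loiter_alerts(detections, zone, max_dwell_s):
    """Return track IDs that stayed inside the zone longer than max_dwell_s."""
    entered_at = {}  # track_id -> timestamp at which the current visit began
    alerted = []
    for d in sorted(detections, key=lambda det: det.timestamp):
        if zone.contains(d):
            start = entered_at.setdefault(d.track_id, d.timestamp)
            if d.timestamp - start > max_dwell_s and d.track_id not in alerted:
                alerted.append(d.track_id)
        else:
            # Leaving the zone resets the dwell timer for that person.
            entered_at.pop(d.track_id, None)
    return alerted


entrance = Zone(0, 0, 100, 100)
stream = (
    [Detection(1, t, 50, 50) for t in range(0, 40, 5)]  # person 1 stays put
    + [Detection(2, 0, 50, 50), Detection(2, 5, 50, 50), Detection(2, 10, 500, 500)]
)
print(loiter_alerts(stream, entrance, max_dwell_s=30))  # prints [1]
```

An intrusion rule would follow the same shape, raising the alert as soon as a tracked person appears inside a restricted zone rather than waiting for a dwell time to elapse.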

Marcus

My next question is how much customization you do with customers. If you’ve got someone with cameras but little advanced AI capability, can you provide everything else they need, or would they need to bring their own models? And then for those customers who do have trained models, looking to do better with TCO, can you customize a solution for them?

PK

Megh provides a fully customizable, cross-platform, distributed solution. What that means is our solution is built on what we call the principles of Open Analytics, and there are three main principles, or pillars, of Open Analytics.

The first one is open customization. We allow full customization of the video analytics pipeline. We create our own advanced AI libraries for some standard use cases. But we also work with customers who might have their own trained models.

We bring those in, and we provide a mechanism to deploy them very quickly and efficiently on a platform. So that’s all part of customization.

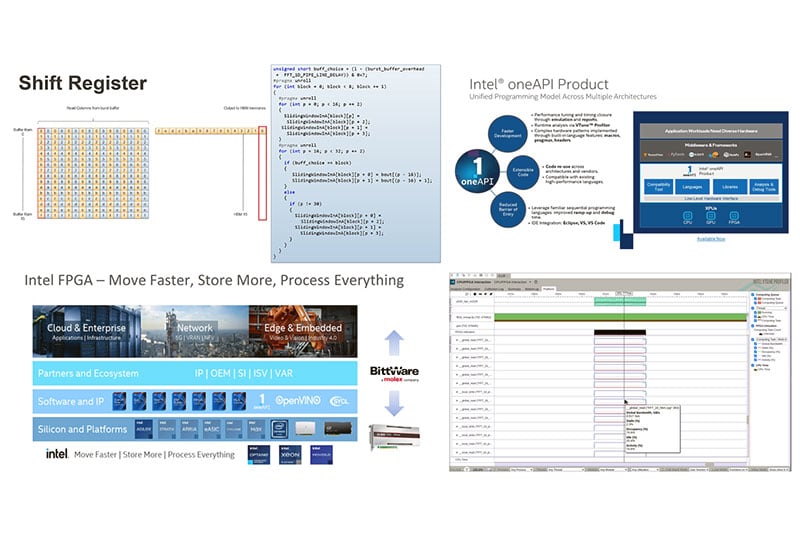

The second pillar is all about open choice, where we allow the customers to choose the optimum platform for the deployment to get to the lowest TCO. For example, we support CPUs, GPUs, FPGAs and even now ASICs for deployment.

The third pillar of Open Analytics is open integration. We can integrate with different kinds of back-end software to provide a complete end-to-end solution.

So in summary, Megh provides total control to the users to implement their video analytics solution.

Marcus

That’s great—it sounds like you have a solution that fits a pretty wide range of where customers already are. But let’s zoom in on that second pillar, which includes hardware acceleration to get better TCO. This includes the BittWare 520NX, so how does this fit in when you have a customer who needs to scale up?

PK

So as part of the second pillar we mentioned, open choice, we support CPUs, GPUs, FPGAs and even ASICs. For a small deployment with a few cameras, for a retail establishment or even a small factory that has a handful of cameras, we can deploy on a small edge server with an ARM processor or even with a few x86 cores. Then we can scale up as people deploy more cameras. We can get a GPU in there and go to maybe 10-20 cameras.

But really high-density applications, say a very large campus setting where there are hundreds or thousands of cameras, need much more processing power. There we typically deploy our solution on one or more enterprise servers, each with one or more FPGA accelerator cards in them.

One of the cards we’ve been using recently is the 520NX from BittWare. And that card uses the Intel Stratix 10 NX FPGA. That FPGA has some enhanced DSP engines that accelerate the implementation of deep learning inferencing very efficiently.

We are getting more than 5X the performance for many models on the 520NX compared to the latest GPU implementation. So that allows us to target some very high-density applications using the 520NX.
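The scaling path PK outlines can be sketched as a simple lookup from camera count to deployment tier. The tier names, the `suggest_platform` function and the exact cutoffs below are assumptions made for illustration: the interview only gives rough ranges ("a handful", "maybe 10-20", "hundreds or thousands") and says nothing about the range in between, so this should not be read as Megh or BittWare sizing guidance.

```python
# Assumed cutoffs, loosely based on the ranges mentioned in the interview.
CPU_MAX_CAMERAS = 9    # "a handful of cameras" on an ARM or small x86 edge server
GPU_MAX_CAMERAS = 20   # "maybe 10-20 cameras" once a GPU is added

TIERS = {
    "cpu-edge": "small edge server with an ARM processor or a few x86 cores",
    "gpu-edge": "edge server with a GPU",
    "fpga-server": "one or more enterprise servers with FPGA accelerator cards",
}


def suggest_platform(camera_count: int) -> str:
    """Return the key of the deployment tier assumed for a given camera count."""
    if camera_count < 1:
        raise ValueError("camera_count must be at least 1")
    if camera_count <= CPU_MAX_CAMERAS:
        return "cpu-edge"
    if camera_count <= GPU_MAX_CAMERAS:
        return "gpu-edge"
    # Everything larger is treated as high density in this sketch.
    return "fpga-server"


for cameras in (4, 16, 800):
    tier = suggest_platform(cameras)
    print(f"{cameras} cameras -> {tier}: {TIERS[tier]}")
```

In practice the right tier would also depend on resolution, frame rate and the models being run, none of which this sketch takes into account.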

Marcus

Now I know you’re also finding some use cases where the Intel Agilex FPGA is a good fit as well. BittWare has the IA-420F card which provides high efficiency plus PCIe Gen4—more hardware floating point DSPs but also at lower power consumption. How do you see Agilex helping your customers?

PK

So we are very excited by the new Agilex FPGAs, which BittWare is going to bring to market soon. We are focusing the 520NX on very high performance deep learning engines, as I mentioned earlier. But we look at Agilex with its varied products. So there’s a lower-end product that will come in a 75-watt power envelope, which can be deployed on small edge servers with a single FPGA card. And there’s the higher-end Agilex card that would have equivalent performance to the 520NX.

So we get a wider range of FPGAs targeting different applications. We also get the benefit of native floating point support, FP32 support on Agilex cards, which then allows us to bring to market newer models more quickly without having to go through a quantization phase.

And also the latest PCIe Gen 4 gives us enough bandwidth and low latency to support various streaming applications.

The combination of all this will allow us to meet our customer needs. The customer needs are evolving to more and more complex custom use cases, especially on the military/government/aerospace side. We are seeing increasing demand for applications that cover a wider spectrum of form factors, power usages and also newer models that need to be brought to the market quickly. We believe the Agilex FPGAs will allow us to meet these customer needs.
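To make the "quantization phase" that PK mentions more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The interview does not say which number format or toolchain Megh uses, so int8, the function names and the sample weights are all assumptions for illustration. The point is only that converting FP32 values to a low-precision integer format introduces rounding error that has to be checked, which is the step native FP32 support lets a new model skip.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights from one layer of a model.
weights = [0.02, -0.5, 0.731, 1.27, -1.0]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

With this scheme the round-trip error for each value is at most half the scale. Whether that is acceptable depends on the model, which is why quantized deployments usually need an accuracy validation step before release.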

Marcus

So PK, for my last question, could you elaborate a bit on what we touched on earlier, the different levels of customization with VAS? I know you go from a complete off-the-shelf solution for customers to get going quickly to more of a custom solution model. How does Megh think about these levels?

PK

We have two offerings to meet our customers’ needs. The first offering is what we call the VAS Suite, which is a collection of custom use cases for worker safety, physical security and the other examples that we discussed before. These are available out of the box. So when a customer comes to us for these kinds of standard use cases, we can deploy very quickly. We have what we call Level 1 customization, where they can configure things using our GUI and get it deployed very quickly.

Then we have something called Level 2 customization where we might have to tweak the pipeline to support a custom model—a custom AI model—or custom analytics. And we typically would do that ourselves—do it very quickly—and within a couple of weeks we can introduce a new model or new analytics and then deploy that for the customer.

And the third level of customization is for when the use case requires deeper engagement with the customer. It might be a very special or very customized use case, or one requiring custom AI models. And for that we offer a VAS SDK, where we work with the customer directly and help them build the application, providing all the help they need. So that’s typically used to build custom models which might take a bit longer to bring to market.

So we have these different levels of customization options that we offer our customers to help them meet the varying needs they might bring to us.

Marcus

I’ve been speaking with PK Gupta, co-founder and CEO of Megh Computing. As he said, BittWare offers the Megh solution which includes the VAS Suite on our Intel FPGA-based cards including the 520NX, IA-420F and IA-840F.