XUP-VVH PCIe Card with Xilinx Virtex UltraScale+ VU37P FPGA

Introduced in 2021, DDR5 SDRAM brings many performance enhancements and will be available on FPGA cards, but just how much faster is it? And how does it compare to other memory types like HBM2e and GDDR6?

The simple way of comparing DDR5 with DDR4 is to say that it has twice the bandwidth. However, such a broad statement doesn’t capture the real-world variety of factors, including module speeds and the number of modules in the system (four DDR4 modules may provide similar performance to two DDR5 DIMMs, for example). To better compare these DRAM technologies, we’ll start by looking at the underlying factors that give DDR5 its bandwidth at a theoretical maximum level. Then we will see how this translates to performance at a system level on several BittWare FPGA accelerator cards. We will also compare against the higher-end memory types, GDDR6 and HBM, to see if they still have a significant edge over standard DDR memory.

Let’s start by looking at how the bandwidth is calculated at a DIMM level. Across the five generations of DDR, module performance is usually stated either in MT/s (mega transfers per second) or GB/s (gigabytes per second). The clock rate is half of the data transfer rate, which is where the “double data rate” comes from in the DDR acronym. This ratio hasn’t changed with DDR5.

What has changed is a significant increase in the transfer speeds (MT/s or GB/s) available. Today’s DDR4 has reached speeds of 3,200 MT/s (@ 1.6 GHz clock rate) for ECC modules. Modules are usually named after their speed, so this would be DDR4-3200. For DDR5, a DDR5-5600 option suitable for FPGA-attached SDRAM is already available: that’s 5,600 MT/s (@ 2.8 GHz).

This speed advantage will further grow over time with the DDR5 specification supporting up to 8,400 MT/s (@ 4.2 GHz)! But for today, our comparison uses the DDR5-5600 modules that would be targeted for FPGA cards.
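The relationship between module speed and clock rate can be checked with a few lines of arithmetic. This is a minimal sketch using only the speeds quoted above; the function name `clock_ghz` is ours, chosen for illustration.

```python
def clock_ghz(transfer_rate_mts: int) -> float:
    """Return the clock rate in GHz for a DDR module rated in MT/s."""
    # DDR moves data twice per clock cycle, so the clock is half the transfer rate.
    return transfer_rate_mts / 2 / 1000


for name, rate in [("DDR4-3200", 3200), ("DDR5-5600", 5600), ("DDR5-8400", 8400)]:
    print(f"{name}: {rate:,} MT/s -> {clock_ghz(rate):.1f} GHz")
```

The three results line up with the 1.6 GHz, 2.8 GHz, and 4.2 GHz clock rates given in the text.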

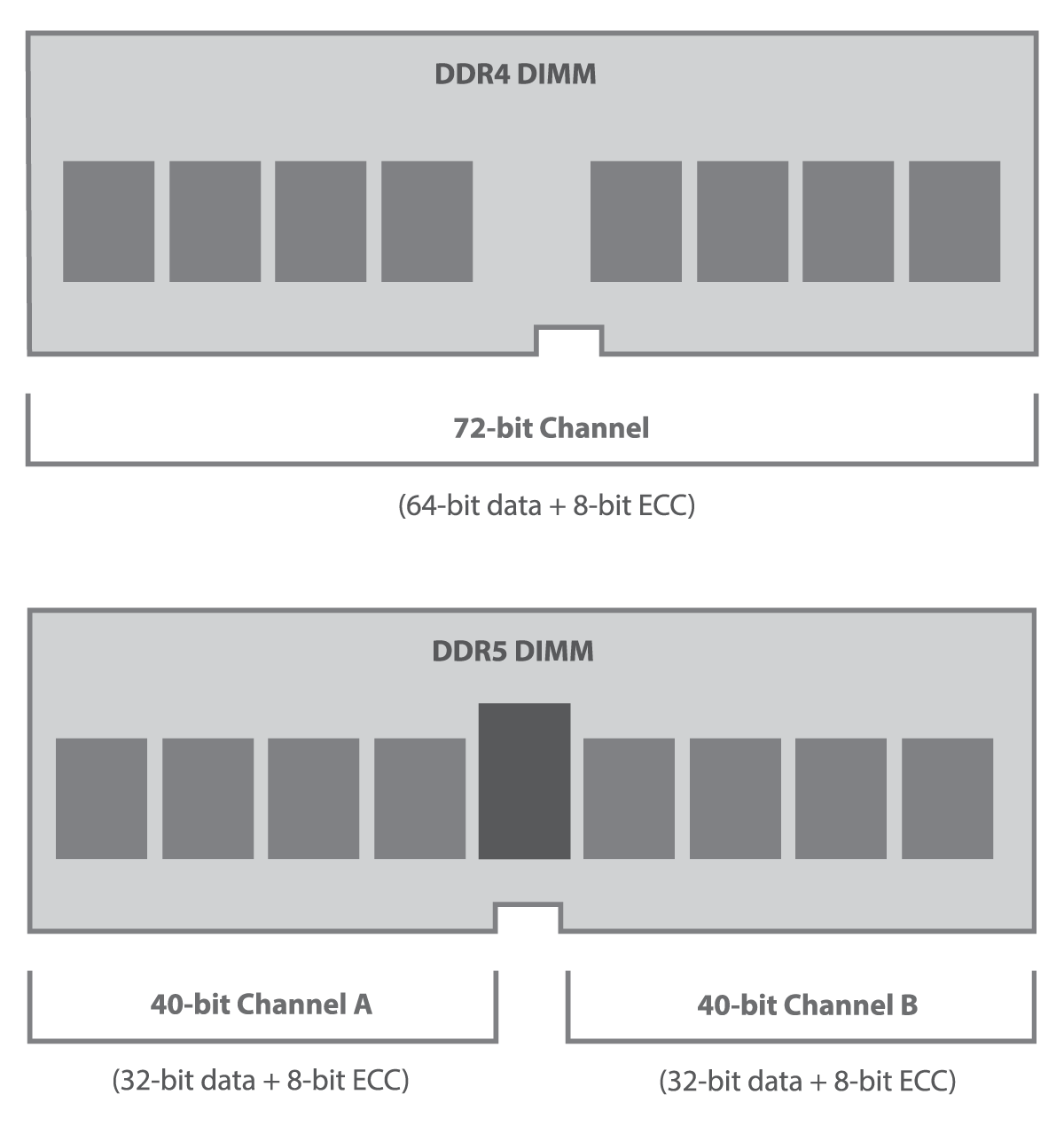

We noted the “D” in DDR means the clock is half the data rate, and this hasn’t changed with DDR5. There is a notable change in the number of channels per module, as DDR5 DIMMs are now dual-channel. Those channels have smaller bus widths, which generally negates the raw bandwidth advantage you might expect here. Specifically, each DDR5 module has two 40-bit channels, with 8 of those bits for ECC. So on a module level, that’s 80 bits total, compared to DDR4’s single channel of 72 bits (including 8 bits for ECC).

Have a look at the diagram to see it laid out on the module level:

So, with 80 bits versus 72, DDR5 does have a slight advantage if the user is turning those ECC bits into “regular” data bits. If you’re not using ECC for regular data, then the two are essentially the same: 64 bits single channel or 64 bits spread over two channels.
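To make the bit counting concrete, here is a small sketch that tallies data and ECC bits per module from the channel layouts described above. The `ModuleLayout` class and its field names are illustrative assumptions, not part of any vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleLayout:
    channels: int
    data_bits_per_channel: int
    ecc_bits_per_channel: int

    @property
    def data_bits(self) -> int:
        # Bits available when ECC is used as ECC.
        return self.channels * self.data_bits_per_channel

    @property
    def total_bits(self) -> int:
        # Bits available if the ECC lines are repurposed as regular data.
        return self.channels * (self.data_bits_per_channel + self.ecc_bits_per_channel)


# DDR4: one 72-bit channel (64 data + 8 ECC).
DDR4 = ModuleLayout(channels=1, data_bits_per_channel=64, ecc_bits_per_channel=8)
# DDR5: two 40-bit channels (32 data + 8 ECC each).
DDR5 = ModuleLayout(channels=2, data_bits_per_channel=32, ecc_bits_per_channel=8)

for name, layout in [("DDR4", DDR4), ("DDR5", DDR5)]:
    print(f"{name}: {layout.data_bits} data bits, {layout.total_bits} bits with ECC")
```

Both layouts carry 64 data bits; the 80-versus-72 difference comes entirely from DDR5 having ECC bits on each of its two channels.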

For our comparisons below, we assumed the full channel width with the ECC bits included, giving a slight edge to DDR5 (80 bits versus 72 at the module level). We should note there’s a further advantage to having two channels on the DDR5 side: access to the memory is more efficient, which can improve latency.

Though we aren’t covering them in this article, there are other DDR5 advantages beyond bandwidth. For power, the DDR5 DIMMs have a power management integrated circuit on the DIMM module, instead of requiring power management to be implemented on the card. And DDR5 requires lower voltage than DDR4 (1.1V vs. 1.2V), which helps with the increased power needed to run at a higher speed.

Some important networking applications require doing a table lookup and update for every single packet passing through the FPGA. For small tables, a programmer can use the low latency, static memory found inside the FPGA. However, when table size overflows the FPGA’s capacity, programmers need to leverage the FPGA’s external memory. This can pose a significant performance challenge. It also presents an opportunity for a card vendor like BittWare to differentiate itself in the market. An example of this is BittWare’s unique support of QDR-II+ static, external memory in many of our AMD FPGA-based products. This feature landed us several key design wins for customers looking to process packets at about 10 GbE packet rates.

However, as packet rates go up, both access rates and table sizes go up. The solution for today’s 100+ GbE rates is to offer dynamic memory with many, many channels. We need a lot more channels than the simple doubling in channel count between DDR4 and DDR5. This is why many of our latest FPGA card offerings provide either GDDR6 or HBM2e memory.

All of the dynamic memory technologies (DDR4/5, GDDR, and HBM) offer roughly the same core access latency. Increasing the channel count reduces latency from queuing delay. Having lots of channels also allows crafting table lookup and update algorithms that choreograph the parallel memory accesses. This can create deterministic table lookup latencies at high access rates using a deep pipeline.
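As a rough illustration of why channel count matters for queuing, the sketch below spreads a burst of table lookups across channels and reports the deepest per-channel queue. It assumes simple low-order interleaving (`key % n_channels`) and a hypothetical burst of sequential keys; a real design would likely hash the keys and would be implemented in FPGA logic rather than Python.

```python
from collections import Counter


def channel_for_key(key: int, n_channels: int) -> int:
    """Low-order interleaving: consecutive keys land on consecutive channels."""
    return key % n_channels


def deepest_queue(keys, n_channels: int) -> int:
    """Largest number of lookups that pile up on any one channel."""
    per_channel = Counter(channel_for_key(k, n_channels) for k in keys)
    return max(per_channel.values())


burst = range(4096)  # hypothetical burst of table keys, one per packet
for n in (2, 4, 16, 32):
    print(f"{n:>2} channels: deepest queue = {deepest_queue(burst, n)} lookups")
```

Since each channel has roughly the same core access latency, the time to drain the burst scales with the deepest queue, so going from 2 to 32 channels cuts the worst-case wait by 16x in this idealized case.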

Now let’s move from the module level to where it really matters: the system level (or card level in the case of accelerators with memory). Here, factors such as the number of banks (DIMM slots or groups of discrete soldered memory) and the supported speeds can have a significant impact on performance.

For our comparison we chose three FPGA boards with a total of four configurations (for one card we show two configurations, to compare DDR4-2400 with DDR4-3200). The bandwidth is relatively simple to calculate, but we feel that presenting system bandwidth, even as a theoretical maximum figure, gives good insight into the best option for your application.

| Card | Memory Type(s) | Total Channels + Width | Total Memory | Module Speed | Clock | Total Bandwidth¹ |
|---|---|---|---|---|---|---|
| 520N-MX | 2x DIMMs of DDR4-2400 | 2x 72 bits | 32 GB (2x 16 GB) | 2,400 MT/s 2.4 GB/s | 1.2 GHz | 19.2 GB/s |
| IA-840f | 2x DIMMs of DDR4-2400 + 2x discrete DDR4-2400 | 4x 72 bits | 128 GB (4x 32 GB) | 2,400 MT/s 2.4 GB/s | 1.2 GHz | 38.4 GB/s |
| IA-840f | 2x DIMMs of DDR4-3200 + 2x discrete DDR4-3200 | 4x 72 bits | 64 GB (4x 16 GB) | 3,200 MT/s 3.2 GB/s | 1.6 GHz | 51.2 GB/s |
| FPGA Card | 2x DIMMs of DDR5-5600 | 4x 40 bits | 128 GB (2x 64 GB) | 5,600 MT/s 5.6 GB/s | 2.8 GHz | 44.8 GB/s |

For the 520N-MX, which is on the low end for bandwidth, the two DDR4-2400 modules provide 19.2 GB/s total theoretical bandwidth. But jump down to the last line: an FPGA card with only two DDR5 DIMMs gives over double the bandwidth, at 44.8 GB/s! This is on a card that supports DDR5-5600 modules. So for an equal number of DIMMs, DDR5 can indeed give twice the per-module performance of DDR4.

However, look now at the middle two lines of the table (the IA-840f card), where we show two configurations. One has DDR4-2400 (just like the 520N-MX) and the other DDR4-3200. Both configurations include two DIMMs plus two soldered discrete banks, which are configured similarly to DIMMs, giving a rough board-level equivalent of four DIMMs.

The result? While the 2,400 MT/s configuration is still slower than DDR5, moving to 3,200 MT/s actually provides 51.2 GB/s, slightly higher bandwidth than DDR5. Of course, that’s using today’s DDR5 speeds, which will eventually be eclipsed by even higher-bandwidth modules. Plus, because DDR5 is so much faster and supports larger capacity per module, you save on physical space (both mechanically and in thermal airflow), which can be a design factor for a PCIe card with larger FPGAs.
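The comparisons in the last few paragraphs can be reproduced directly from the Total Bandwidth column of the table. The sketch below only divides the published figures; the dictionary labels and the helper `relative_to` are our own shorthand.

```python
def relative_to(gbs: float, baseline_gbs: float) -> float:
    """Bandwidth of one configuration as a multiple of a baseline."""
    return gbs / baseline_gbs


# Total Bandwidth column from the table above, in GB/s.
table_gbs = {
    "520N-MX, DDR4-2400": 19.2,
    "IA-840f, DDR4-2400": 38.4,
    "IA-840f, DDR4-3200": 51.2,
    "FPGA card, DDR5-5600": 44.8,
}

ddr5 = table_gbs["FPGA card, DDR5-5600"]
for config, gbs in table_gbs.items():
    print(f"{config}: {gbs:5.1f} GB/s = {relative_to(gbs, ddr5):.2f}x the DDR5 card")
```

Read this way, the four-bank DDR4-3200 configuration comes out roughly 14% ahead of the two-DIMM DDR5 card, while the two-DIMM DDR4-2400 card reaches less than half of it.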

As you can see, while DDR5 has a significant (and growing as faster speeds become available) advantage over DDR4, there remains an order of magnitude difference when compared to GDDR6, HBM, and HBM2e.

Ultra-high-speed HBM2e (an update of HBM2) is implemented in-package with the FPGA. With two 16 GB stacks of memory on the IA-860m card’s Intel Agilex 7 M-series FPGA (32 GB total), the combined peak bandwidth is up to 820 GB/s. That’s up to 18x more than an FPGA card with DDR5!

Although they’re not quite as fast as HBM2e, GDDR6 and HBM still outperform DDR5 by 10x. GDDR6 supports two independent 16-bit channels per bank, so a card with 8 banks of GDDR6 (such as BittWare’s S7t-VG6 with an Achronix FPGA) has up to 448 GB/s of bandwidth. Our XUP-VVH gets its HBM memory thanks to the AMD Virtex UltraScale+ VU37P device.
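The multiples quoted for HBM2e and GDDR6 follow from the figures given in this article, as the short sketch below shows. The per-pin rate at the end is only what the 448 GB/s figure implies for a 256-bit bus; it is a derived number, not a value taken from a datasheet.

```python
DDR5_CARD_GBS = 44.8  # two DDR5-5600 DIMMs, from the comparison table
HBM2E_GBS = 820.0     # IA-860m, two HBM2e stacks
GDDR6_GBS = 448.0     # S7t-VG6, eight GDDR6 banks

print(f"HBM2e vs DDR5: {HBM2E_GBS / DDR5_CARD_GBS:.1f}x")
print(f"GDDR6 vs DDR5: {GDDR6_GBS / DDR5_CARD_GBS:.1f}x")

# GDDR6 bus width: 8 banks x 2 channels per bank x 16 bits per channel.
gddr6_bus_bits = 8 * 2 * 16

# Per-pin data rate implied by the quoted bandwidth (GB/s -> Gb/s, then per bit of bus).
implied_gbps_per_pin = GDDR6_GBS * 8 / gddr6_bus_bits
print(f"GDDR6: {gddr6_bus_bits}-bit bus, implied {implied_gbps_per_pin:.0f} Gb/s per pin")
```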

Perhaps what’s most helpful in conclusion is to note the variety of options available from the BittWare portfolio of FPGA cards. DDR5 is an improvement and will be an excellent new way to get large, fast memory. But don’t rule out DDR4 just yet: it’s a more mature technology, widely available, and can still hold its own compared to DDR5. For really memory-intensive applications, look to ultra-high-speed options like HBM2e and GDDR6.
