Homomorphic Encryption Acceleration


FPGAs with HBM2 memory are faster than GPUs when algorithms include “corner turns”. This is because the FPGA can overlap the corner-turn operation with computation, making it “free” from a latency perspective. We demonstrate the technique using a 2D FFT and provide the source code below. To keep the comparison “fair”, that code uses a data type that GPUs also support, although FPGAs can easily process any data type.

BittWare previously created a 2D FFT kernel for FPGAs using Intel’s OpenCL compiler. We have now rewritten that code to leverage Intel’s oneAPI programming model, specifically its DPC++ programming language, and achieved similar performance on our 520N-MX card. Intel is promoting oneAPI as the best toolchain for applications that require GPU or FPGA acceleration.

The 2D FFT is often found in FPGA IP libraries and thus is not something programmers generally implement themselves. However, a common implementation strategy for 2D FFT on parallel hardware involves a “corner turn” or “data transposition” step that poses a major performance bottleneck on CPUs and GPUs.

The insight we highlight in this demonstration is that an FPGA implementation can perform data transposition in parallel with computation, making it almost “free” from a latency perspective.

A GPU cannot do the same because GPU architectures do not have enough on-chip memory to pipeline intermediate results without touching HBM2/GDDR6 memory.
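The row-column strategy described above can be sketched in NumPy. This is only a functional model of the common decomposition (1D FFTs on rows, corner turn, 1D FFTs again), not the DPC++ kernel itself; the function name `fft2_row_column` is hypothetical, and the final transpose back to the original orientation is an assumption made so the result can be compared with `np.fft.fft2`.

```python
import numpy as np

def fft2_row_column(x):
    """Functional model of a 2D FFT built from 1D FFTs and corner turns."""
    rows = np.fft.fft(x, axis=1)         # pass 1: 1D FFT along every row
    turned = rows.T                      # corner turn (data transposition)
    cols = np.fft.fft(turned, axis=1)    # pass 2: 1D FFT along rows of the turned data
    return cols.T                        # assumed: turn back to the original layout

# Compare against NumPy's reference 2D FFT on random complex input.
rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16)) + 1j * rng.standard_normal((16, 16))
matches_reference = np.allclose(fft2_row_column(x), np.fft.fft2(x))
```

On a CPU or GPU the `rows.T` step implies a strided pass over memory; the point made above is that the FPGA hides that step inside the pipeline.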

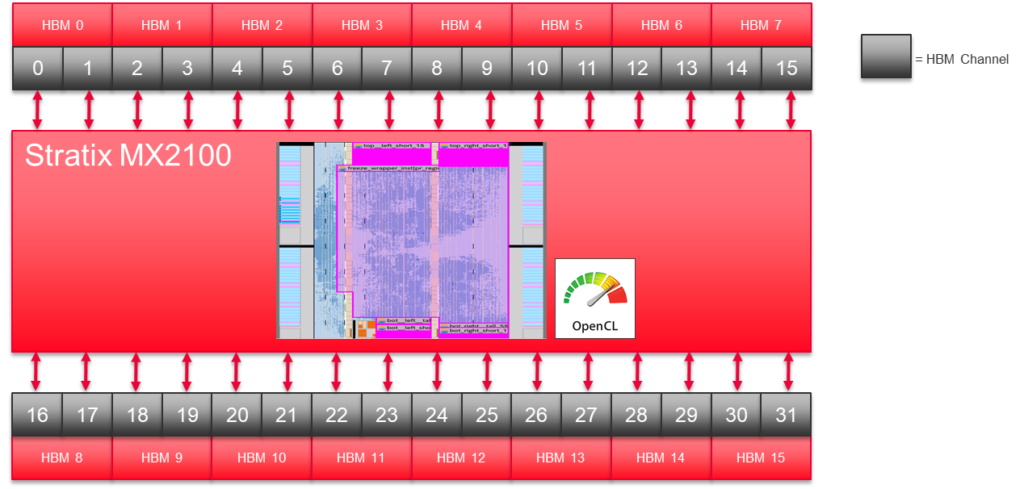

The Stratix 10 MX has 32 HBM2 pseudo-channels. Our 2D FFT implementation uses half of them.


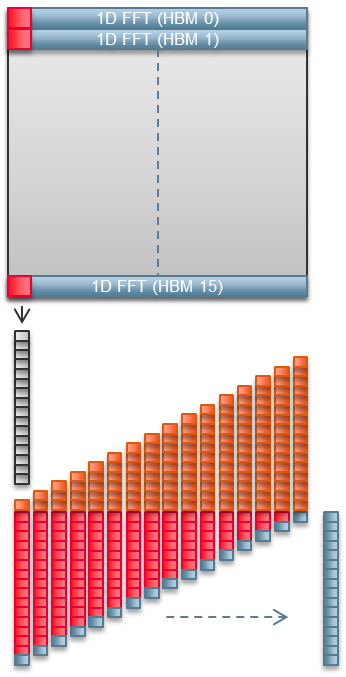

We don’t start the 16 1D FFTs on the same clock cycle. Instead, we stagger their starting points by one complex element each. This allows us to pipeline the 1D FFT results into the corner-turn logic.

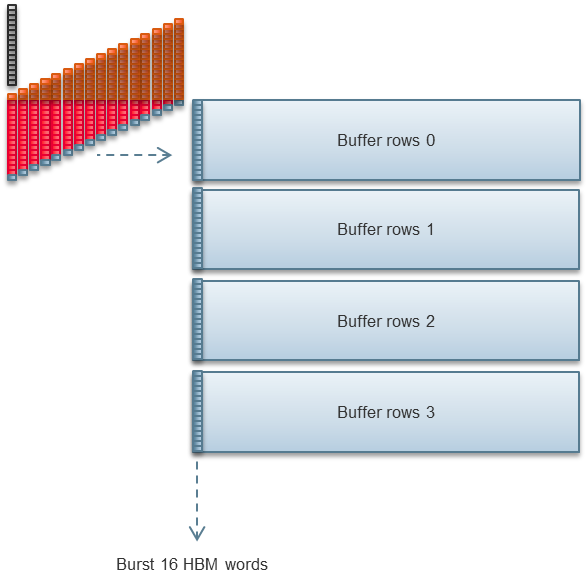

We need to wait for the output of four 1D FFTs in order to be able to write the transposed data to HBM in a 32-byte chunk.
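The chunking arithmetic can be illustrated with a small NumPy model. Four results filling a 32-byte chunk implies 8-byte elements, so this sketch assumes single-precision complex data (`np.complex64`); the helper names are hypothetical, and the model ignores clock-level timing and the staggered start of the FFT engines.

```python
import numpy as np

ELEM_BYTES = np.dtype(np.complex64).itemsize   # 8 bytes (assumed element type)
CHUNK_BYTES = 32                               # HBM write granularity from the text
ROWS_PER_CHUNK = CHUNK_BYTES // ELEM_BYTES     # number of row results per chunk

def corner_turn_chunks(rows_out):
    """Yield (column, first_row, chunk) once a group of row FFT results is ready.

    Each chunk holds ROWS_PER_CHUNK consecutive elements of one row of the
    transposed array, i.e. one column slice across the group of rows.
    """
    n_rows, n_cols = rows_out.shape
    for r0 in range(0, n_rows, ROWS_PER_CHUNK):
        group = rows_out[r0:r0 + ROWS_PER_CHUNK]   # wait for this many row results
        for c in range(n_cols):
            yield c, r0, group[:, c].copy()        # contiguous chunk for the write

def transpose_via_chunks(rows_out):
    """Assemble the transposed array purely from chunk-sized writes."""
    n_rows, n_cols = rows_out.shape
    out = np.empty((n_cols, n_rows), dtype=rows_out.dtype)
    for c, r0, chunk in corner_turn_chunks(rows_out):
        out[c, r0:r0 + chunk.size] = chunk
    return out
```

With these assumptions every write into the transposed layout is a full 32-byte chunk, provided the number of rows is a multiple of `ROWS_PER_CHUNK`.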

BittWare’s development team uses RTL (Verilog and VHDL), HLS (mostly C++), OpenCL, and now DPC++. We previously published a white paper comparing RTL with HLS. This project was our first opportunity to compare OpenCL with DPC++.

We are pleased to report that moving from OpenCL code for FPGA to DPC++ for FPGA was easy. The adaptations specific to FPGAs and the HBM2 on-package memory were very similar to the OpenCL version. Data movement on and off the host computer was simple. We even called our 2D FFT kernel from NumPy, something we never attempted with the OpenCL version.

We are using 512 M20K memories (12.5% of an MX2100).

The length of FFTs implemented for FPGAs is typically set at bitstream compile time.

We implemented our own 1D FFT (as opposed to using somebody’s FPGA IP library). The 1D FFT is radix-2 and fully pipelined. It is not in-place.

2D FFT performance will always be limited by the HBM2 bandwidth. Thus our focus is to find an algorithm that maximizes HBM2 bandwidth utilization. That also means we can use HBM2 bandwidth benchmarks to closely estimate FFT performance.

The peak HBM2 performance for a batch-1 implementation, with two independent kernels in the same device, is 291 GBytes/sec. When pipelining/batching, a peak bandwidth of 337 GBytes/sec is possible.
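As a rough illustration of how a bandwidth figure translates into an FFT time estimate, the sketch below divides the bytes moved by the measured bandwidth. Everything beyond the two bandwidth numbers is an assumption: the 1024 x 1024 size is hypothetical, elements are taken to be 8 bytes, each of the two passes is assumed to read and write the whole array once, 1 GByte is taken as 10^9 bytes, and the quoted bandwidth is assumed to cover reads and writes combined.

```python
def estimate_fft2d_seconds(n, bandwidth_gbytes_per_s, bytes_per_element=8, passes=2):
    """Bandwidth-bound time estimate for an n x n 2D FFT (assumptions in the text)."""
    # Each pass is assumed to read the full array once and write it once.
    bytes_moved = passes * 2 * n * n * bytes_per_element
    return bytes_moved / (bandwidth_gbytes_per_s * 1e9)

# Hypothetical 1024 x 1024 transform at the two bandwidth figures quoted above.
t_batch1 = estimate_fft2d_seconds(1024, 291)
t_batched = estimate_fft2d_seconds(1024, 337)
print(f"batch 1: {t_batch1 * 1e6:.1f} us, batched: {t_batched * 1e6:.1f} us")
```

The estimate is only as good as the traffic model; an implementation that moves the data a different number of times would need a different `passes` value.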

We were pleased to be able to quickly port our 2D FFT from OpenCL to oneAPI. Although the work to optimize the approach for an HBM2-enabled FPGA card was already done, the port shows that OpenCL users have an excellent path to oneAPI on BittWare hardware with minimal impact.

Working with HBM2 on FPGAs offers significant advantages in certain applications, as we have seen here. If you would like more information on the code we presented, please get in touch using the form below to inquire about availability, or to learn more about the 520N-MX FPGA card from BittWare.

You can request the open-source TAR file by filling out this form. The code you will receive has open source legends.

We compile the oneAPI 2D FFT into a dynamically linked shared object library for Linux. Just type “cmake .” to create that library.

We call that library from a simple NumPy script which creates some input data and then either validates or plots the output. Type “python runfft.py” to see a graph.

The software above was tested on CentOS 8 with the Intel oneAPI tools Beta 10 release using a BittWare 520N-MX card, which hosts an MX2100 FPGA. The released OpenCL board support package for that card also allows oneAPI to work.

Article Homomorphic Encryption Acceleration FPGA acceleration enables this unique solution that allows compute on encrypted data without decrypting or sharing keys Traditional Encryption Limits Encrypting

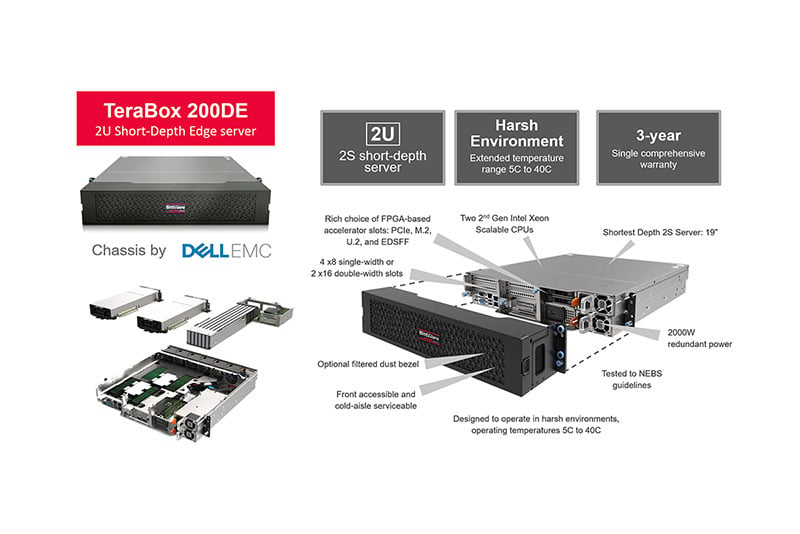

BittWare On-Demand Webinar Enterprise-class FPGA Servers: The TeraBox Approach FPGA-based cards are maturing into critical devices for data centers and edge computing. However, there’s a

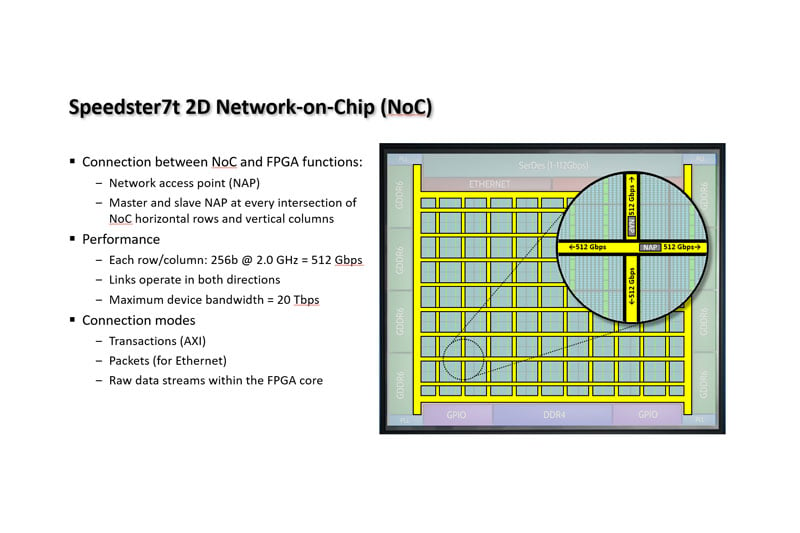

BittWare Webinar Introducing VectorPath S7t-VG6 Accelerator Card Now available on demand: In this webinar, Achronix® and Bittware will discuss the growing trends of using PCIe

High-speed networking can make timestamping a challenge. Learn about possible solutions including card timing kits and the Atomic Rules IP TimeServo.