TeraBox 2102D FPGA Server

FPGA Server TeraBox 2102D 2U Server for FPGA Cards Legacy Product Notice: This is a legacy product and is not recommended for new designs. It

You may already know of oneAPI™ as a faster, easier way to develop hardware accelerators, particularly on Altera FPGAs. However, not every application is suitable, and it often takes some initial development to establish baseline performance.

Could your application benefit from oneAPI™?

Starting with our enterprise-class accelerator cards is an excellent choice. Our cards with oneAPI support are shown below, along with details to get you started.

Want more details about oneAPI? Jump to our More About oneAPI section.

Ready to get hardware or have questions? Jump to our Where to Buy and Contact section.

With decades of experience supplying high-level FPGA tools, we know what customers need from the start: a fast onramp to evaluate key performance metrics like F-max on your design. That’s why our standard Accelerator Support Package (ASP) is optimized for high-performance computing. Some key benefits of choosing a BittWare accelerator card supporting oneAPI as a starting point:

Explore using oneAPI with our 2D FFT demo on the 520N-MX card featuring HBM2. Be sure to request the code download at the bottom of the page!

We recommend these cards for oneAPI because each has the latest tools support, an HPC-focused ASP, and a high-performance Altera FPGA.

Our accelerator cards with Altera Agilex devices are all candidates for oneAPI support. However, if you do not choose a recommended card, building up an ASP just to evaluate performance will be a significant development project.

That’s why we recommend starting with these cards which have an HPC-optimized ASP ready for you. We can discuss other platforms for volume deployments.

Need the power of HBM2 memory for your oneAPI application? Our IA-860m will be added to our recommended cards for oneAPI development. Contact us to get updated when it’s ready.

In oneAPI terms, the ASP (formerly called the Board Support Package or BSP), is the inner ring that bridges your SYCL code and the card’s hardware. It’s what enables features, defines where oneAPI resides physically on the chip (floor plan), and really is the defining element of performance potential within the scope of a particular accelerator.

The Accelerator Support Package (ASP) plays a big role in performance.

The ASP—your board vendor’s specific oneAPI implementation—defines how the oneAPI code interfaces with hardware resources on both the chip and card level. There are many variables which can result in lower or higher performance, more or less silicon resource usage, and I/O features available.

Choosing your board vendor is as important as choosing the right FPGA. That’s why BittWare focuses on high-performance oneAPI ASP development as a baseline, adding features only as required. Starting with a lower-performance ASP, even one with more features, can produce less-than-ideal results that mask how oneAPI would actually perform for you.

Customers can customize for more features as required.

Should you need more features than our ASP provides, you’re already working with enterprise-class hardware that has additional I/O that can be enabled. Your own team can enable these resources with oneAPI, or you can talk to us about more customization options.

Contact us to get a quote or recommendation for getting a customized ASP that meets your project needs.

You may have heard several more terms related to oneAPI: the Open FPGA Stack (OFS), the FPGA Interface Manager (FIM), and the Accelerator Functional Unit (AFU). How do these relate to the Accelerator Support Package (ASP)? Why do you sometimes see a reference to a Board Support Package (BSP)?

OFS is the highest-order component. However, it’s important not to think of oneAPI as comprising all that OFS offers. Such an implementation would be neither resource-efficient nor easy to maintain. It’s better to consider OFS as a broad library of features, with a particular oneAPI implementation offering a subset of them.

This is why a “supports OFS” label, when comparing boards, doesn’t tell you much about which features are implemented, what performance to expect, or how many resources are used. You’ve got to dig further into how the ASP (formerly called BSP) is implemented.

The next “level down” is the FIM, the FPGA Interface Manager. It defines the particular interface between hardware features, including those on the FPGA itself, and the oneAPI software. You can think of the FIM as an OFS-based shell. If you have RTL programming resources, you can add or remove features from the FIM.

Working “inside” of the FIM is the AFU, the actual algorithm or processing unit that provides acceleration. You can think of this as your user application space, with development using software tools but the advantages of hardware instantiation.

Lastly, the ASP brings together these components: hardware interfaces and how they interact with the oneAPI code plus host software tools like Quartus for development. You’ll see reference to a BSP (board support package) and oneAPI; this is a similar term for the ASP. The best way to think of the ASP is as the component that turns a FIM (with its user application AFU area) into a target for oneAPI.

What are the next steps? What’s the typical flow from development to deployment?

Choose the right card with a quality ASP.

Not all cards you see advertised with “oneAPI” are going to be suitable for evaluation. We’ve chosen to implement our ASPs on cards with Altera F-Series, I-Series, and soon M-Series Agilex FPGAs. Check out our list of recommended accelerator boards; we are ready for a discussion on what’s the best fit for you.

Get the basics.

With hardware in hand, you’ll be using Altera Quartus Design Software (sold separately), and oneAPI tools. With BittWare, we have our Developer site to guide you as part of your accelerator board purchase.

Develop faster with oneAPI.

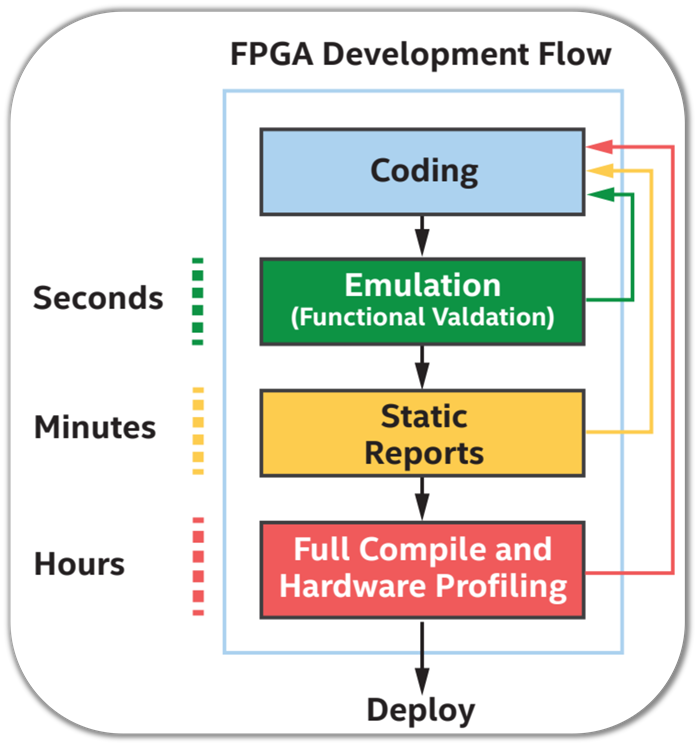

If you’re used to native RTL development, oneAPI will be a welcome improvement because full compile runs are reduced. Thanks to test bench emulation and reports, early iterations are completed in seconds or minutes. Moving on to full compiles, you can use the VTune Profiler to further refine your project.

Good news if you started with a BittWare accelerator.

By starting with BittWare accelerators, your deployment can be on the same cards you’ve been using in development! Talk to us about volume requirements depending on your needs.

What is oneAPI?

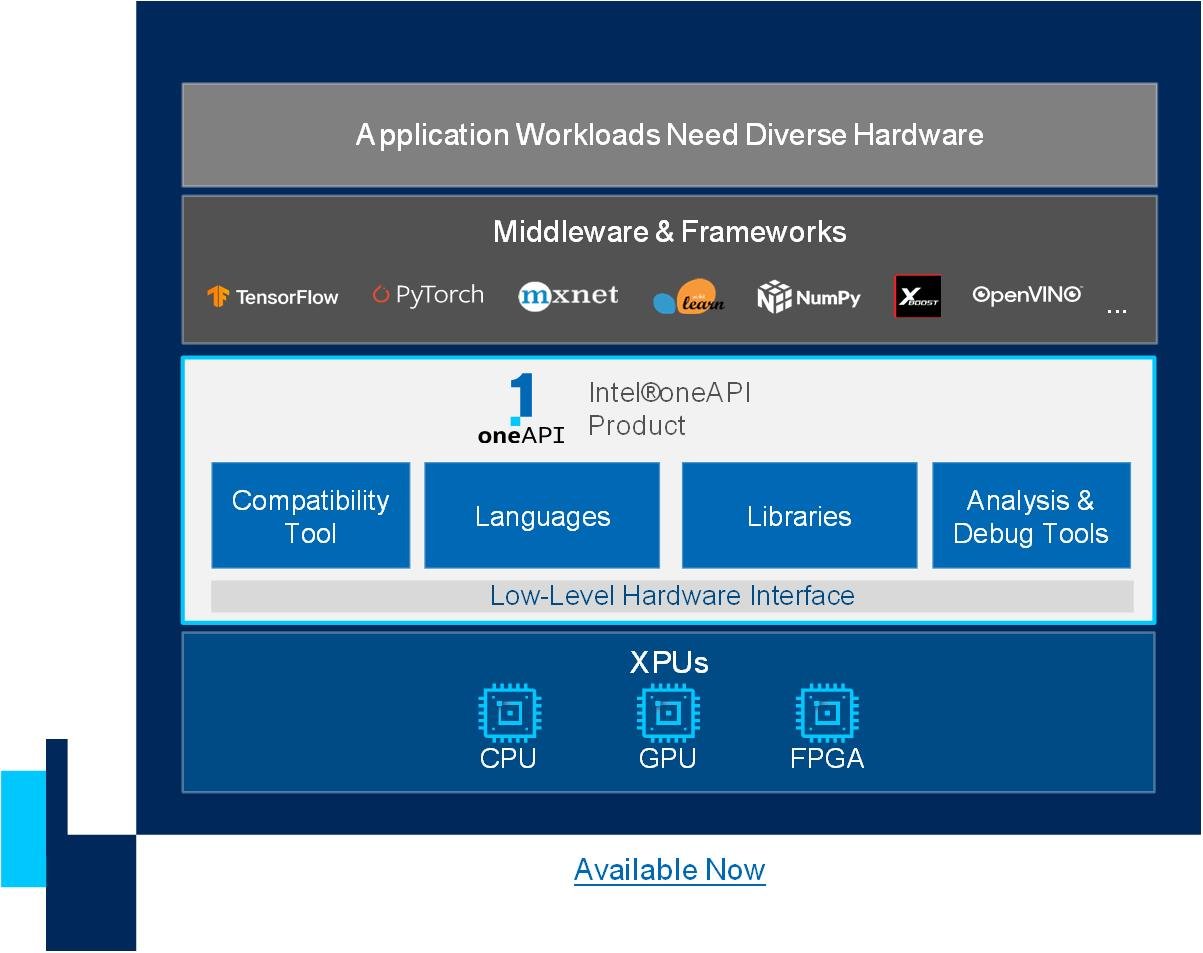

oneAPI is a cross-industry, open, standards-based unified programming model that delivers a common developer experience across accelerator architectures—for faster application performance, more productivity, and greater innovation. The oneAPI industry initiative encourages collaboration on the oneAPI specification and compatible oneAPI implementations across the ecosystem.

oneAPI provides libraries for compute and data intensive domains. They include deep learning, scientific computing, video analytics, and media processing.

The oneAPI specification extends existing developer programming models to enable a diverse set of hardware through language, a set of library APIs, and a low level hardware interface to support cross-architecture programming. To promote compatibility and enable developer productivity and innovation, the oneAPI specification builds upon industry standards and provides an open, cross-platform developer stack.

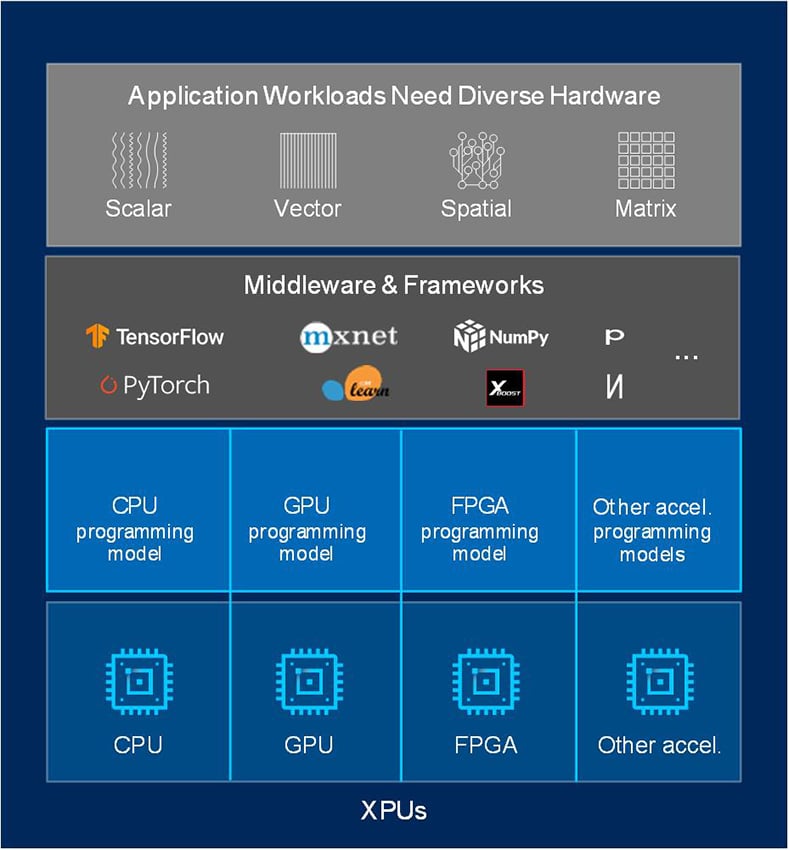

In today’s HPC landscape, several hardware architectures are available for running workloads – CPUs, GPUs, FPGAs, and specialized accelerators. No single architecture is best for every workload, so using a mix of architectures leads to the best performance across the most scenarios. However, this architecture diversity leads to some challenges:

Each architecture requires separate programming models and toolchains.

Software development complexity limits freedom of architectural choice.

oneAPI delivers a unified programming model that simplifies development across diverse architectures. With the oneAPI programming model, developers can target different hardware platforms with the same language and libraries. They can also develop and optimize code on different platforms using the same set of debug and performance analysis tools, for instance getting run-time data across their host and accelerators through the VTune Profiler.

Using the same language across platforms and hardware architectures makes source code easier to reuse. Even if platform-specific optimization is still required when code is moved to a different hardware architecture, no code translation is required anymore. Using a common language and set of tools also results in faster training for new developers, faster debugging, and higher productivity.

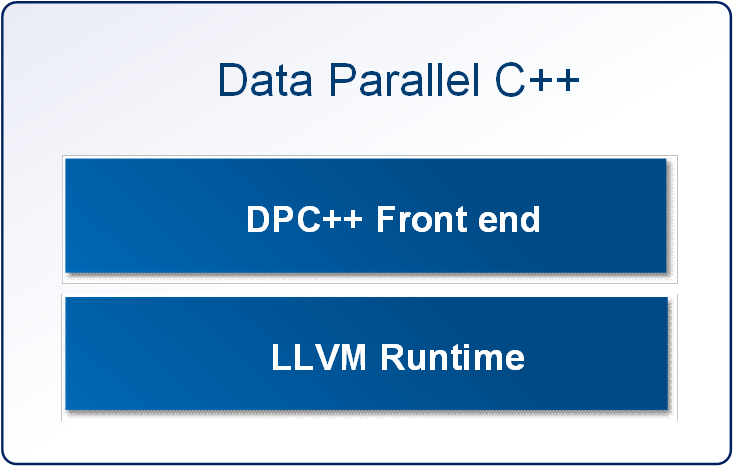

The oneAPI language is Data Parallel C++, a high-level language designed for parallel programming productivity and based on the C++ language for broad compatibility. DPC++ is not a proprietary language; its development is driven by an open cross-industry initiative.

DPC++ is a language designed to deliver uncompromised parallel programming productivity and performance across CPUs and accelerators. It is based on C++, and a community project drives language enhancements.
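To make the language description concrete, here is a minimal sketch of a single-source vector addition written against the SYCL 2020 API that DPC++ builds on. The function name `vector_add` and the `HAVE_SYCL` macro are illustrative choices, not part of oneAPI or of any BittWare ASP. As a portability assumption, the sketch falls back to a plain host loop when no SYCL headers are found, so it still builds with an ordinary C++17 compiler.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Use SYCL when its headers are available; otherwise fall back to host C++.
#if __has_include(<sycl/sycl.hpp>)
#include <sycl/sycl.hpp>
#define HAVE_SYCL 1
#else
#define HAVE_SYCL 0
#endif

// Element-wise sum of two equally sized vectors (hypothetical helper).
std::vector<float> vector_add(const std::vector<float>& a,
                              const std::vector<float>& b) {
  assert(a.size() == b.size());
  std::vector<float> out(a.size());
  if (a.empty()) return out;

#if HAVE_SYCL
  sycl::queue q;  // default device chosen by the SYCL runtime
  {
    // Buffers wrap host memory for the lifetime of this scope.
    sycl::buffer<float> buf_a(a.data(), sycl::range<1>(a.size()));
    sycl::buffer<float> buf_b(b.data(), sycl::range<1>(b.size()));
    sycl::buffer<float> buf_out(out.data(), sycl::range<1>(out.size()));

    q.submit([&](sycl::handler& h) {
      sycl::accessor in_a(buf_a, h, sycl::read_only);
      sycl::accessor in_b(buf_b, h, sycl::read_only);
      sycl::accessor res(buf_out, h, sycl::write_only, sycl::no_init);
      // The kernel body is ordinary C++ in the same source file.
      h.parallel_for(sycl::range<1>(a.size()), [=](sycl::id<1> i) {
        res[i] = in_a[i] + in_b[i];
      });
    });
  }  // buf_out is destroyed here, which copies the results back into `out`
#else
  // Host fallback: same result, no accelerator involved.
  for (std::size_t i = 0; i < a.size(); ++i) out[i] = a[i] + b[i];
#endif
  return out;
}
```

With a SYCL compiler, the default-constructed `sycl::queue` runs on whichever device the runtime selects. Targeting an FPGA card would additionally need an FPGA device selector and the board's ASP installed.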

One of the main problems when compiling code for FPGAs is compile time: the backend compile that translates DPC++ code into a timing-closed FPGA design, implementing the hardware architecture that the code specifies, can take hours to complete. The FPGA development flow has therefore been tailored to minimize full compile runs.
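One common way to keep full compiles rare is to run the same source against the FPGA emulator first and rebuild for hardware only later. The sketch below assumes the Intel FPGA extension header shipped with recent oneAPI toolkits. The `FPGA_HARDWARE` macro follows the convention used in Intel's public samples, and the function name `selected_target` is hypothetical. When the SYCL FPGA headers are not found, the sketch only reports that fact, so it still compiles with a plain C++17 compiler.

```cpp
#include <string>

// Detect the SYCL headers plus the Intel FPGA extension header.
#if __has_include(<sycl/sycl.hpp>) && \
    __has_include(<sycl/ext/intel/fpga_extensions.hpp>)
#include <sycl/sycl.hpp>
#include <sycl/ext/intel/fpga_extensions.hpp>
#define HAVE_SYCL_FPGA 1
#else
#define HAVE_SYCL_FPGA 0
#endif

// Returns a description of the device that kernels would be submitted to.
std::string selected_target() {
#if HAVE_SYCL_FPGA
  try {
#if defined(FPGA_HARDWARE)
    // Full hardware build: requires an installed card and its ASP.
    sycl::queue q{sycl::ext::intel::fpga_selector_v};
#else
    // Default: emulator build, which avoids the hours-long backend compile.
    sycl::queue q{sycl::ext::intel::fpga_emulator_selector_v};
#endif
    return q.get_device().get_info<sycl::info::device::name>();
  } catch (const sycl::exception& e) {
    // Thrown when no device matches the requested selector.
    return std::string("no matching device: ") + e.what();
  }
#else
  return "host only (SYCL FPGA headers not found)";
#endif
}
```

Because only the selector changes, kernel code can stay identical between emulation and hardware runs. The compiler flags needed for each build depend on the toolkit version and the card's ASP, so they are left out here.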

Our technical sales team is ready to provide availability and configuration information, or answer your technical questions.